3 A review and analysis of current digital self-control tools

To explore how this model may be useful in mapping digital self-control interventions, we conducted a systematic review and analysis of apps on the Google Play and Apple App stores, as well as browser extensions on the Chrome Web store. We identified apps and browser extensions described as helping users exercise self-control, avoid distraction, or manage addiction in relation to digital device use, coded their design features, and mapped them to the components of our dual systems model.⁴

3.1 Methods

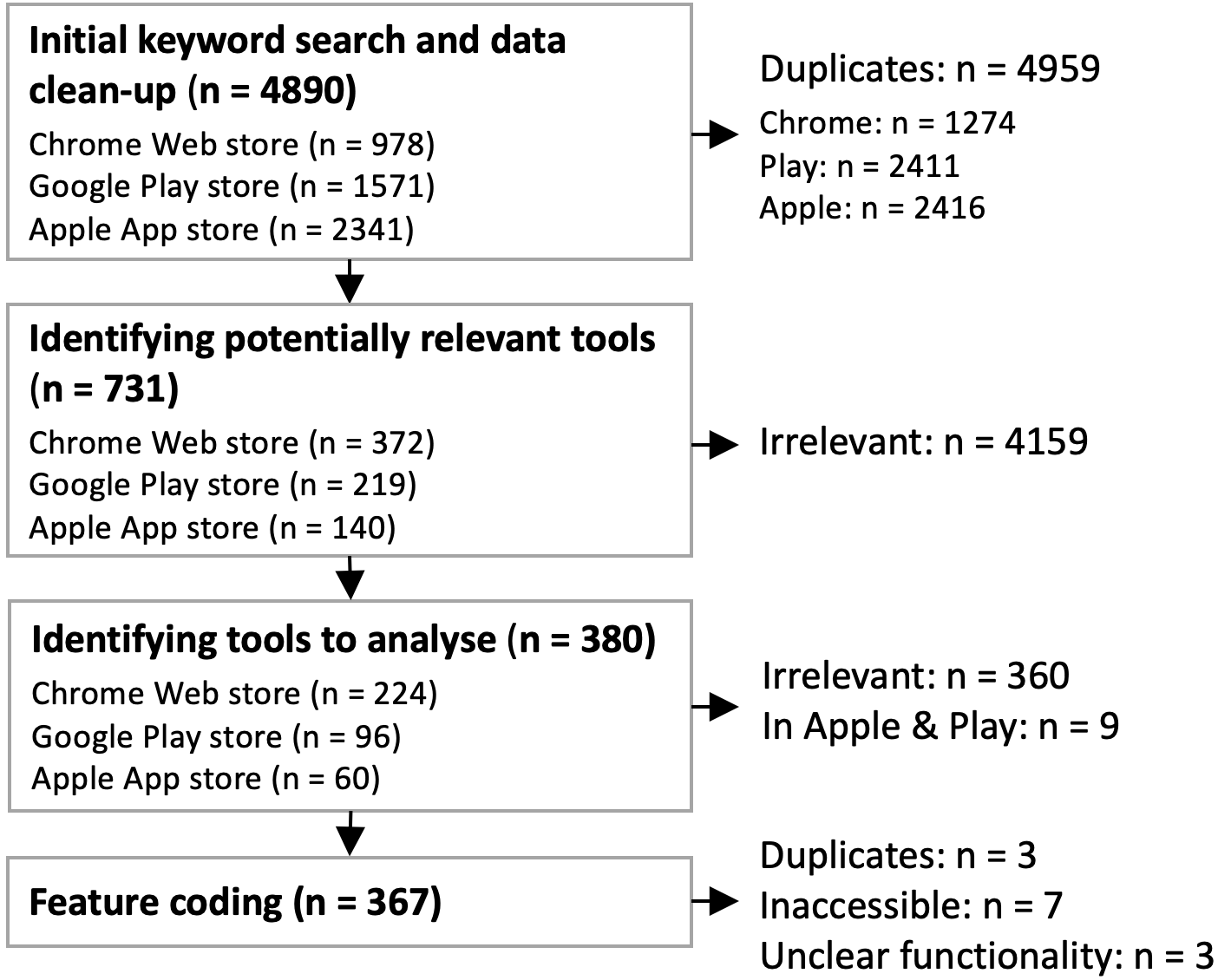

3.1.1 Initial Keyword Search and Data Clean Up

For the Google Play and Apple App stores, we used pre-existing scripts (Olano 2018b, 2018a) to download search results for the terms ‘distraction’, ‘smartphone distraction’, ‘addiction’, ‘smartphone addiction’, ‘motivation’, ‘smartphone motivation’, ‘self-control’ and ‘smartphone self-control’. For the Chrome Web store, we developed our own scraper (Slack 2018) and downloaded search results for the same terms, with the prefix ‘smartphone’ replaced by ‘laptop’ and, separately, by ‘internet’ (e.g. ‘laptop distraction’ and ‘internet distraction’). We scraped the US and UK stores separately between 22nd and 27th August 2018. After we excluded duplicate results returned by multiple search terms and/or by both the US and UK stores, 4890 distinct apps and extensions remained (1571 from Google Play, 2341 from the App Store, and 978 from the Chrome Web store).
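As a minimal sketch of this step (not the cited scraping scripts themselves), the Python below derives the Chrome Web store term list from the mobile terms and counts distinct tools per store. It assumes, hypothetically, that each scraped result carries the store name, country, search term and the store’s own identifier for the tool, and that two results are duplicates when store and identifier match; `Hit`, `chrome_terms` and `count_distinct_per_store` are illustrative names.

```python
from collections import Counter
from typing import Iterable, NamedTuple

# Search terms used on Google Play and the App Store (as listed in the text).
MOBILE_TERMS = [
    "distraction", "smartphone distraction",
    "addiction", "smartphone addiction",
    "motivation", "smartphone motivation",
    "self-control", "smartphone self-control",
]


def chrome_terms(terms: list[str]) -> list[str]:
    """Replace the 'smartphone' prefix by 'laptop' and, separately, by 'internet'."""
    out = []
    for term in terms:
        if term.startswith("smartphone "):
            rest = term[len("smartphone "):]
            out += [f"laptop {rest}", f"internet {rest}"]
        else:
            out.append(term)  # unprefixed terms are searched unchanged
    return out


class Hit(NamedTuple):
    """One scraped search result (hypothetical record format)."""
    store: str    # e.g. "google_play", "app_store", "chrome_web_store"
    country: str  # "us" or "uk"
    term: str     # search term that returned the result
    tool_id: str  # the store's identifier for the app or extension


def count_distinct_per_store(hits: Iterable[Hit]) -> dict[str, int]:
    """Drop duplicates returned by several terms or by both countries, then count per store."""
    distinct = {(h.store, h.tool_id) for h in hits}  # term and country are ignored
    return dict(sorted(Counter(store for store, _ in distinct).items()))
```

For example, two hits for the same extension returned by ‘laptop distraction’ on the US store and ‘internet distraction’ on the UK store are counted once.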

3.1.2 Identifying Potentially Relevant Apps and Extensions

Following similar reviews (Shen et al. 2015; Stawarz et al. 2018), we then manually screened each tool’s title and short description (if available; otherwise the first paragraphs of the full description). We included apps and extensions explicitly designed to help people self-regulate their digital device use, and excluded tools intended for general productivity or for self-regulation in domains other than digital device use, as well as tools not available in English (for detailed exclusion criteria, see osf.io/zyj4h).

This resulted in 731 potentially relevant apps and extensions (219 from Google Play, 140 from the App Store, and 372 from the Chrome Web store).

3.1.3 Identifying Apps and Extensions to Analyse

We reviewed the remaining tools in more detail by reading their full descriptions. If it remained unclear whether an app or extension should be excluded, we also reviewed its screenshots. If an app existed in both the Apple App store and the Google Play store, we dropped the Apple App store version.⁵

After this step, we were left with 380 apps and extensions to analyse (96 from Google Play, 60 from the App Store, and 224 from the Chrome Web store).
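The text does not say how cross-store duplicates were matched. Purely as an illustration, the sketch below assumes a match on normalised title and developer name (a hypothetical rule that would, for instance, miss apps renamed between stores) and keeps the Google Play version, as described above; `Tool`, `match_key` and `drop_ios_duplicates` are illustrative names.

```python
import re
from typing import NamedTuple


class Tool(NamedTuple):
    store: str      # "google_play" or "app_store"
    title: str
    developer: str


def _normalise(text: str) -> str:
    """Lower-case and collapse runs of punctuation/whitespace to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def match_key(tool: Tool) -> tuple[str, str]:
    # Hypothetical matching rule: same normalised title and developer name.
    return _normalise(tool.title), _normalise(tool.developer)


def drop_ios_duplicates(tools: list[Tool]) -> list[Tool]:
    """Keep all Google Play tools; keep an App Store tool only if it has no Android counterpart."""
    android = {match_key(t) for t in tools if t.store == "google_play"}
    return [t for t in tools if t.store != "app_store" or match_key(t) not in android]
```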

Figure 3.1: Flowchart of the search and exclusion/inclusion procedure

3.1.4 Feature coding

Following similar reviews, we coded functionality based on the descriptions, screenshots, and videos available on a tool’s store page (cf. Stawarz, Cox, and Blandford 2014, 2015; Stawarz et al. 2018; Shen et al. 2015). We iteratively developed a coding sheet of feature categories (cf. Bender et al. 2013; Orji and Moffatt 2018), with the prior expectation, drawing on our previous work in this area (Lyngs 2018a), that the relevant features would be usefully classified as subcategories of the main feature clusters ‘block/removal’, ‘self-tracking’, ‘goal advancement’ and ‘reward/punishment’.

Initially, three of the authors each independently reviewed and classified features in 10 apps and 10 browser extensions (a total of 30 unique apps and 30 unique browser extensions), before comparing and discussing the feature categories identified and creating the first iteration of the coding sheet. Using this coding sheet, two authors each independently reviewed 60 additional apps and browser extensions, and a third author reviewed these 120 tools as well as all remaining ones. After comparing and discussing the results, we developed a final codebook, on the basis of which the first author revisited and recoded the features in all tools. In addition to the granular feature coding, we noted which main feature cluster(s) represented a tool’s ‘core’ design, using the guideline that a cluster counted as core if 25% or more of the tool’s functionality related to it (a single tool could belong to multiple clusters).⁶
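The 25% ‘core’ guideline can be written down as a small function. This is a minimal sketch, assuming (our simplification, not necessarily the coders’ procedure) that a tool’s ‘functionality’ is measured as its number of coded features and that each feature belongs to exactly one main cluster; `core_clusters` is an illustrative name.

```python
from collections import Counter


def core_clusters(feature_clusters: list[str], threshold: float = 0.25) -> set[str]:
    """Return the main clusters that account for at least `threshold` of a tool's features.

    `feature_clusters` holds, for each coded feature of one tool, the main cluster
    it belongs to (assumption: 'share of functionality' = share of coded features).
    """
    if not feature_clusters:
        return set()
    counts = Counter(feature_clusters)
    total = len(feature_clusters)
    # A tool can have several core clusters, e.g. an even split between two clusters.
    return {cluster for cluster, n in counts.items() if n / total >= threshold}
```

Under this reading, a tool with three block/removal features and one self-tracking feature has both clusters at its core (75% and 25%), whereas a fourth block/removal feature would push self-tracking down to 20% and out of the core.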

During the coding process, we excluded a further 13 tools: 3 duplicates (e.g. where ‘pro’ and ‘lite’ versions did not differ in described functionality), 7 that had become inaccessible after the initial search, and 3 whose functionality was not described well enough to be coded. This left 367 tools in the final dataset.
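The counts reported through the search and exclusion procedure can be checked for consistency with a few lines of arithmetic. The sketch below uses only numbers given in this section and in the caption of Figure 3.3; the per-store exclusions during coding are derived here, not reported directly.

```python
# Per-store counts reported in Sections 3.1.1-3.1.4 and Figure 3.3,
# in the order (Google Play, App Store, Chrome Web store).
STAGES = {
    "distinct after de-duplication": (1571, 2341, 978),
    "potentially relevant": (219, 140, 372),
    "selected for analysis": (96, 60, 224),
    "final dataset": (86, 58, 223),
}

totals = {stage: sum(counts) for stage, counts in STAGES.items()}

# Tools excluded during coding, per store (derived by subtraction).
excluded_per_store = [
    before - after
    for before, after in zip(STAGES["selected for analysis"], STAGES["final dataset"])
]

# Reported breakdown of the coding-stage exclusions.
reported_exclusions = {"duplicates": 3, "inaccessible": 7, "insufficiently described": 3}

assert sum(excluded_per_store) == sum(reported_exclusions.values()) == 13
assert totals["final dataset"] == totals["selected for analysis"] - 13 == 367
```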

3.2 Results

3.2.1 Feature prevalence

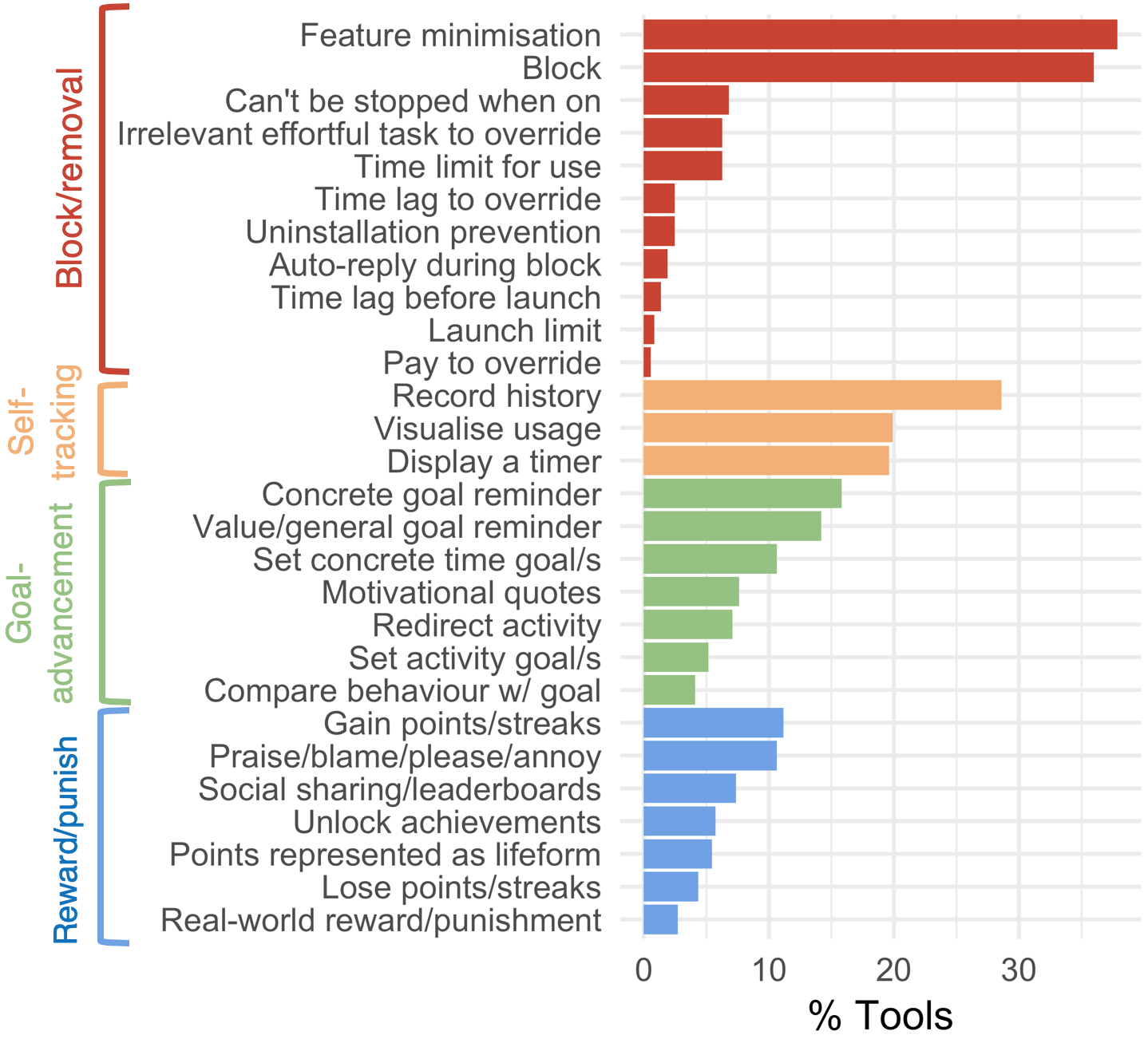

Figure 3.2: Functionality of digital self-control tools (N = 367)

A summary of the prevalence of features is shown in Figure 3.2. The most frequent feature cluster related to blocking or removing distractions, some variation of which was present in 74% of tools. Of all tools, 44% (163) enabled the user to put obstacles in the way of distracting functionality, either by blocking access entirely (132 tools), by setting limits on how much time could be spent (23 tools) or how many times distracting functionality could be launched (3 tools) before it was blocked, or by adding a time lag before distracting functionality would load (5 tools). In addition, 14% of tools (50) added friction if the user attempted to remove the blocking, including disallowing a blocking session from being stopped (25 tools), requiring the user to first complete an irrelevant effortful task or type in a password (23 tools), tinkering with administrator permissions to prevent the tool from being uninstalled (9 tools), or adding a time lag before the user could override blocking or change settings (9 tools). For example, the Focusly Chrome extension (Trevorscandalios 2018) blocks sites on a blacklist; to override the blocking and enter a blocked site, the user must correctly type in a series of 46 arrow keys (e.g. \(\rightarrow\uparrow\downarrow\rightarrow\leftarrow\rightarrow\ldots\)).
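The obstacle types above can be summarised as a single access decision, plus a friction task for overriding it. The sketch below is hypothetical throughout: `Restriction`, `decide`, `override_challenge` and `override_granted` are illustrative names, and the arrow-key challenge is modelled loosely on the description of Focusly rather than on its actual code.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Restriction:
    """Hypothetical per-target settings covering the obstacle types described above."""
    blocked: bool = False                 # block access entirely
    max_minutes: Optional[float] = None   # block once today's time reaches this limit
    max_launches: Optional[int] = None    # block once launched this many times today
    delay_seconds: float = 0.0            # time lag before the content loads


def decide(r: Restriction, minutes_used: float, launches: int) -> tuple[str, float]:
    """Return ('block', 0.0) or ('allow', delay_in_seconds) for one access attempt."""
    if r.blocked:
        return "block", 0.0
    if r.max_minutes is not None and minutes_used >= r.max_minutes:
        return "block", 0.0
    if r.max_launches is not None and launches >= r.max_launches:
        return "block", 0.0
    return "allow", r.delay_seconds


ARROWS = "←↑→↓"


def override_challenge(length: int = 46, rng: Optional[random.Random] = None) -> str:
    """Friction task: a random arrow sequence the user must retype exactly."""
    rng = rng or random.Random()
    return "".join(rng.choice(ARROWS) for _ in range(length))


def override_granted(challenge: str, typed: str) -> bool:
    """Blocking is lifted only if the whole sequence was typed correctly."""
    return typed == challenge
```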

Rather than blocking content per se, an alternative approach, taken by 38% of tools (139), was to reduce the user’s exposure to distracting options in the first place. This approach was dominated by browser extensions (121 of these 139 tools were from the Chrome Web store), typically in the form of removing elements from specific sites (67 tools; e.g. removing newsfeeds from social media sites or hiding an email inbox). The sites most frequently targeted were Facebook (26 tools), YouTube (17), Twitter (11) and Gmail (7). General ‘reader’ extensions were also popular, removing distracting content when browsing the web (27 tools) or when opening new tabs (24). Other notable examples were ‘minimal writing’ tools (22 tools), which remove functionality that is irrelevant to, or distracts from, the task of writing. Finally, a few Android apps (4 tools) limited the amount of functionality available on the device’s home screen.

The second most prevalent feature cluster related to self-tracking, some variation of which was present in 38% of tools (139). Of these, 105 tools recorded the user’s history, 73 provided visualisations of the captured data, and 72 displayed a timer or countdown. In 42 tools, the self-tracking features focused on the time during which the user managed not to use their digital devices, as in the iOS app Checkout of your phone (Schungel 2018).
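As a minimal sketch of what such self-tracking computes, the function below turns a recorded usage history into total time per app or site and the longest stretch without use. The `(target, start, end)` session format and the name `summarise` are hypothetical, and sessions are assumed not to overlap.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def summarise(
    sessions: list[tuple[str, datetime, datetime]],
) -> tuple[dict[str, timedelta], timedelta]:
    """From (target, start, end) sessions, return total time per target and the
    longest gap between consecutive sessions, i.e. time spent *not* using the device."""
    totals: dict[str, timedelta] = defaultdict(timedelta)
    for target, start, end in sessions:
        totals[target] += end - start
    ordered = sorted(sessions, key=lambda s: s[1])  # by start time
    gaps = [nxt[1] - cur[2] for cur, nxt in zip(ordered, ordered[1:])]
    return dict(totals), max(gaps, default=timedelta(0))
```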

The third most prevalent feature cluster related to goal advancement, some variation of which was present in 35% of tools (130). Reminders of a concrete time goal or of a task the user was trying to complete were implemented in 58 tools (e.g. displaying pop-ups when a set amount of time had been spent on a distracting site, or replacing the content of newsfeeds or new tabs with to-do lists), and 52 tools provided reminders of more general goals or personal values (e.g. in the form of motivational quotes). Explicit goal setting featured in 58 tools, which asked the user to set goals either for how much time they wanted to spend using their devices in total or in specific apps or websites (39 tools), or for the tasks they wanted to focus on during use (19 tools). Finally, 15 tools allowed the user to compare their actual behaviour against the goals they had set.
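Comparing actual behaviour against explicit time goals amounts to a per-target comparison. The sketch below is a hypothetical illustration (`goal_report` and its message strings are our own); a tool could, for example, trigger a reminder pop-up whenever a target is reported as over its goal.

```python
def goal_report(
    usage_minutes: dict[str, float], goals_minutes: dict[str, float]
) -> dict[str, str]:
    """Compare today's use of each app/site against the user's time goal for it."""
    report = {}
    for target, goal in goals_minutes.items():
        used = usage_minutes.get(target, 0.0)  # no recorded use counts as zero
        if used > goal:
            report[target] = f"over goal by {used - goal:g} min"
        else:
            report[target] = f"{goal - used:g} min left"
    return report
```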

The fourth most prevalent feature cluster, present in 22% of tools (80), related to reward/punishment, i.e. providing rewards or punishments for the way in which a device is used. Some of these features were gamification interventions such as collecting points/streaks (41 tools), leaderboards or social sharing (27), or unlocking achievements (21). In 20 tools, points were represented as a lifeform (e.g. an animated goat or a growing tree) that might be harmed if the user spent too much time on certain websites or used their phone during specific times. Ten tools added real-world rewards or punishments, e.g. making the user lose money if they spent more than 1 hour on Facebook in a day (Timewaste Timer (Prettymind.co 2018)), allowing virtual points to be exchanged for free coffee or shopping discounts (MILK (Milk The Moment Inc. 2018)), or even letting users administer electric shocks to themselves when accessing blacklisted websites (!) (PAVLOK (Pavlok 2018)).
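Two of these mechanisms, streaks and a daily real-world penalty, can be sketched as follows. The function names, the default 60-minute limit (taken from the Timewaste Timer example) and the `fine` amount are hypothetical; the source does not state how much money is lost.

```python
def current_streak(daily_minutes: list[float], limit: float) -> int:
    """Number of most recent consecutive days on which use stayed within `limit` minutes."""
    streak = 0
    for minutes in reversed(daily_minutes):  # walk back from the most recent day
        if minutes > limit:
            break
        streak += 1
    return streak


def daily_penalty(minutes_on_site: float, limit_minutes: float = 60, fine: float = 1.0) -> float:
    """Hypothetical real-world punishment: charge `fine` if the daily limit was exceeded."""
    return fine if minutes_on_site > limit_minutes else 0.0
```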

Finally, 35% of tools (129) gave the user control over what counted as ‘distraction’, e.g. by letting the user customise which apps or which websites to restrict access to. Among tools implementing blocking functionality, 101 tools implemented blacklists (i.e. blocking specific apps or sites, allowing everything else), while 22 tools implemented whitelists (i.e. allowing only specified apps or sites while blocking everything else).
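The difference between the two list types comes down to which side of the test blocks. In this minimal sketch, `is_blocked` is an illustrative name, and matching a URL’s hostname together with its parent domains (so that `m.facebook.com` matches `facebook.com`) is our assumption rather than a description of any particular tool.

```python
from urllib.parse import urlparse


def is_blocked(url: str, mode: str, sites: set[str]) -> bool:
    """Blacklist mode blocks only listed sites; whitelist mode blocks everything not listed."""
    host = (urlparse(url).hostname or "").lower()
    parts = host.split(".")
    # The hostname and all its parent domains, e.g. m.facebook.com -> facebook.com -> com.
    candidates = {".".join(parts[i:]) for i in range(len(parts))}
    listed = bool(candidates & sites)
    if mode == "blacklist":
        return listed
    if mode == "whitelist":
        return not listed
    raise ValueError(f"unknown mode: {mode!r}")
```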

3.2.2 Feature combinations

65% of tools had only one feature cluster at the core of their design, most frequently blocking/removing distractions (53%). A further 32% (117 tools) combined two main feature clusters, most frequently block/removal with goal advancement (40 tools; e.g. replacing the Facebook newsfeed with a to-do list, or replacing distracting websites with a reminder of the task to be achieved) or self-tracking with reward/punishment (30 tools; e.g. a gamified pomodoro timer in which an animated creature dies if the user leaves the app before the timer runs out). Block/removal core designs were also commonly combined with self-tracking (24 tools; e.g. blocking distracting websites while a timer counts down, or recording and displaying how many times during a block session the user tried to access blacklisted apps). Only two tools (Flipd (Flipd Inc. 2018) and HabitLab (Stanford HCI Group 2018)) combined all four feature clusters in their core design; the Chrome extension HabitLab, developed by the Stanford HCI Group, cycles through different types of interventions to learn which ones best help the user align internet use with their stated goals (cf. Kovacs, Wu, and Bernstein 2018).
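Given each tool’s set of core clusters, the figures in this paragraph are two tallies: how many tools have one, two, three or four core clusters, and how often each specific combination occurs. A minimal sketch, with `combination_counts` as an illustrative name and frozensets used so that the order in which clusters were noted does not matter:

```python
from collections import Counter


def combination_counts(core: dict[str, frozenset[str]]) -> tuple[Counter, Counter]:
    """Count tools by number of core clusters, and by exact combination of core clusters."""
    by_size = Counter(len(clusters) for clusters in core.values())
    by_combo = Counter(core.values())  # frozensets are hashable and order-independent
    return by_size, by_combo
```

Dividing each count by the number of tools gives percentages of the kind reported above.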

3.2.3 Store comparison

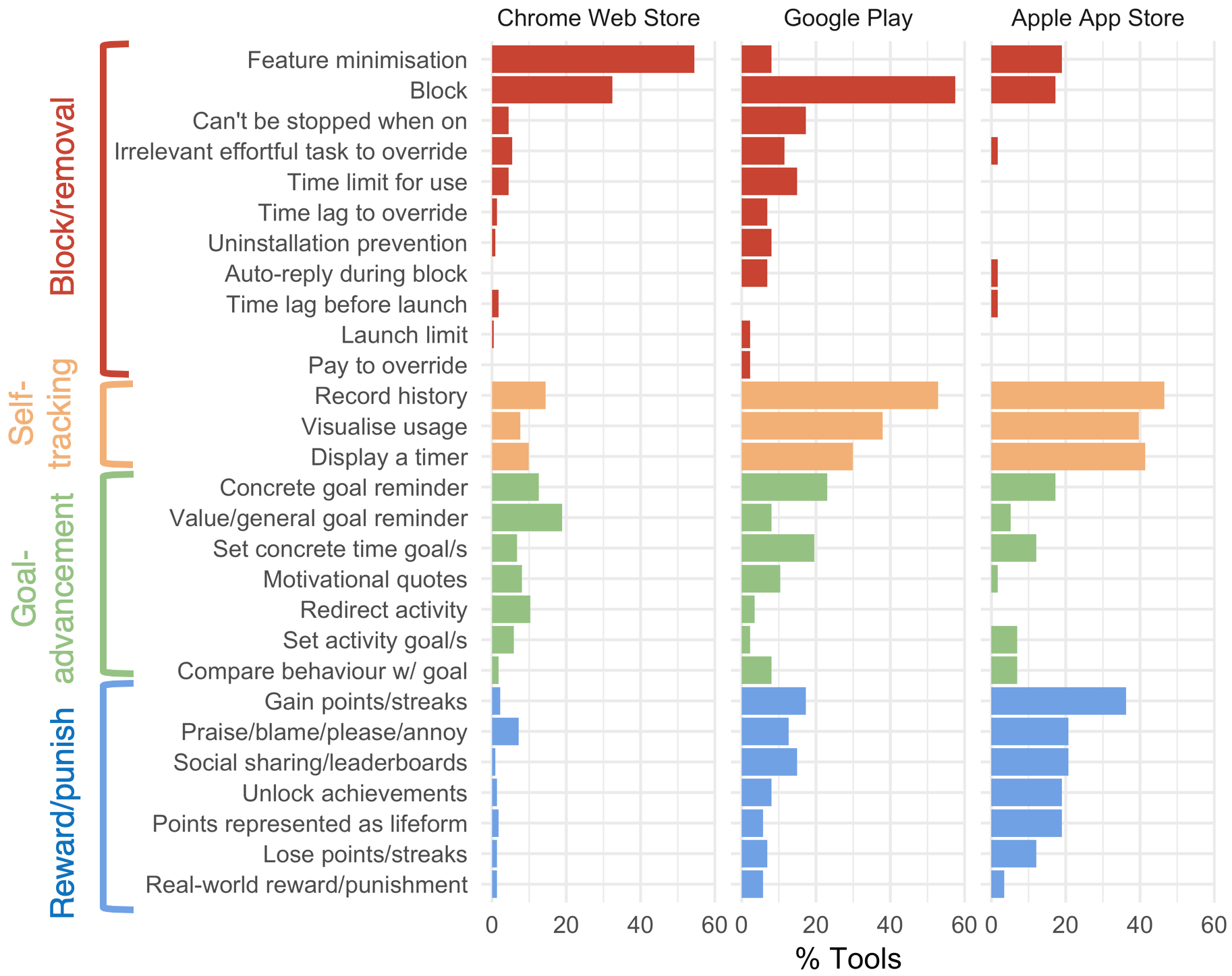

Figure 3.3: Functionality of digital self-control tools on the Chrome Web store (n = 223), Google Play (n = 86) and Apple App Store (n = 58)

Figure 3.3 summarises the prevalence of features, comparing the three stores. The differences between the stores appear to mirror the granularity of system control available to developers. Feature minimisation, in the form of removing particular aspects of the user interface, is common in browser extensions, presumably because developers can wield precise control over the elements displayed on HTML pages by injecting client-side CSS and JavaScript. On mobile devices, however, developers have little control over how another app is displayed, leaving blocking or restricting access as the only viable strategies. Differences between the Android and iOS ecosystems are also apparent: the permissions needed to implement, e.g., scheduled blocking of apps are not available to iOS developers. These differences across stores suggest that if mobile operating systems granted more permissions (as some developers of popular anti-distraction tools have petitioned Apple to do (Digital Wellness Warriors 2018)), developers would respond by creating tools that offer more granular control of the mobile user interface, similar to those that already exist for the Chrome web browser.
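To make the browser-side mechanism concrete: a Chrome extension can hide parts of a page by injecting a stylesheet, for instance through the `css` entry of a `content_scripts` declaration in its manifest. The Python sketch below only builds such a stylesheet from a per-site rule table; the site-to-selector mapping is hypothetical, since real sites’ markup differs and changes frequently, and `stylesheet_for` is an illustrative name.

```python
# Hypothetical site -> CSS selector mapping (illustrative only; real markup differs).
HIDE_RULES: dict[str, list[str]] = {
    "www.facebook.com": ['[role="feed"]'],
    "www.youtube.com": ["#related", "ytd-comments"],
}


def stylesheet_for(host: str, rules: dict[str, list[str]] = HIDE_RULES) -> str:
    """Build the CSS a content script could inject to hide distracting elements on `host`."""
    selectors = rules.get(host, [])
    if not selectors:
        return ""  # nothing to hide on unlisted sites
    return ",\n".join(selectors) + " {\n  display: none !important;\n}\n"
```

No comparable hook exists for restyling another app’s interface on iOS or Android, which is consistent with the store differences described above.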

3.2.4 Mapping identified tool features to theory

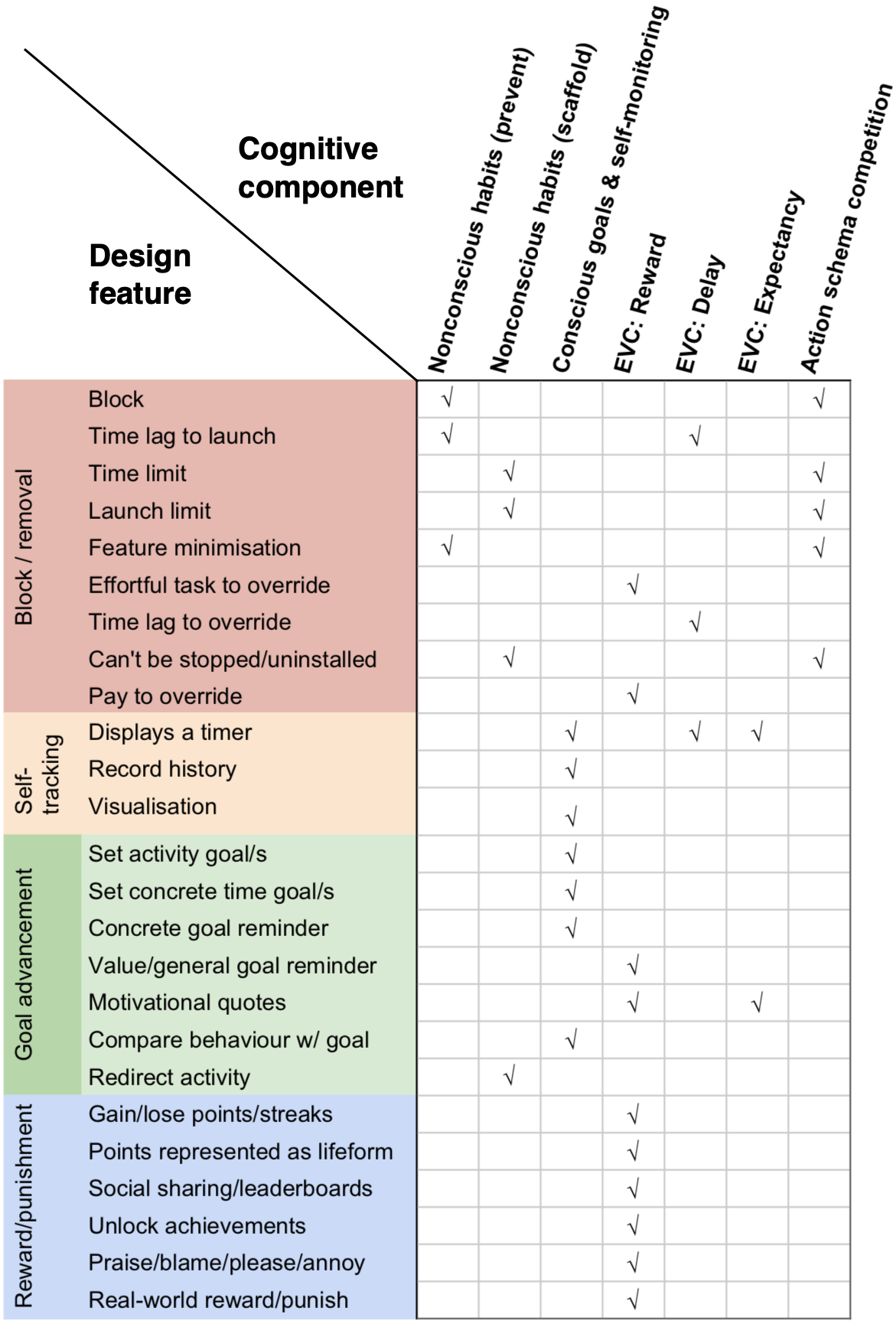

Figure 3.4: Mapping of design features to an integrative dual systems model of self-regulation

Figure 3.4 shows a matrix of how the design features correspond to the main components of the integrative dual systems model, in terms of the cognitive components they have the most immediate potential to influence. Non-conscious habits are influenced by features that block the targets of habitual action or the user interface elements that trigger them, thereby preventing unwanted habits from being activated. Non-conscious habits are also influenced by features which enforce limits on daily use or redirect user activity, thereby scaffolding the formation of new habits. The conscious goals & self-monitoring component is influenced by explicit goal setting and reminders, as well as by timing, recording, and visualising usage and comparing it with one’s goals. The reward component of the expected value of control is influenced by reward/punishment features that add incentives for exercising self-control, as well as by value/general goal reminders and motivational quotes, which encourage the user to reappraise the value of immediate device use in light of what matters in their life; the delay component is influenced by time lags or timers; and expectancy is similarly influenced by timers (‘I should be able to manage to control myself for just 20 minutes!’) as well as by motivational quotes. Finally, action schema competition, which ultimately controls behaviour, is most directly affected by blocking/removal functionality, which prevents unwanted responses from being expressed by simply making them unavailable.

Figure 3.5: Percentage of tools which include at least one design feature targeting a given cognitive component of the dual systems model of self-regulation.

Given this mapping, Figure 3.5 shows the percentage of tools in which at least one design feature maps to a given cognitive component. Similarly to reviews of digital behaviour change interventions (DBCIs) (Pinder et al. 2018; Stawarz, Cox, and Blandford 2015), we find the lowest prevalence for features that scaffold the formation of non-conscious habits (18%), followed by features that influence the delay component of the expected value of control (23%). The current landscape of digital self-control tools is dominated by features that prevent activation of unwanted non-conscious habits (73%) and thereby, by making undesirable responses unavailable, stop them from winning out in action schema competition.
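The percentages in Figure 3.5 count each tool at most once per component, however many of its features target that component. A minimal sketch of the computation follows, using a partial feature-to-component table paraphrased from the mapping described for Figure 3.4; the feature labels, component names and `component_prevalence` are our hypothetical shorthand, not the codebook’s actual codes.

```python
# Partial feature -> component table paraphrased from the Figure 3.4 description.
FEATURE_TO_COMPONENTS: dict[str, set[str]] = {
    "block": {"habits: prevent activation", "action schema competition"},
    "remove_ui_elements": {"habits: prevent activation", "action schema competition"},
    "daily_limit": {"habits: scaffold formation"},
    "redirect": {"habits: scaffold formation"},
    "goal_setting": {"goals & self-monitoring"},
    "usage_visualisation": {"goals & self-monitoring"},
    "points_or_rewards": {"expected value: reward"},
    "time_lag": {"expected value: delay"},
    "timer": {"goals & self-monitoring", "expected value: delay", "expected value: expectancy"},
    "motivational_quotes": {"expected value: reward", "expected value: expectancy"},
}


def component_prevalence(tools: dict[str, set[str]]) -> dict[str, float]:
    """Percentage of tools with at least one feature mapped to each component."""
    n = len(tools)
    counts: dict[str, int] = {}
    for features in tools.values():
        # Take the union first, so a tool counts at most once per component.
        components = set().union(*(FEATURE_TO_COMPONENTS.get(f, set()) for f in features))
        for c in components:
            counts[c] = counts.get(c, 0) + 1
    return {c: 100 * k / n for c, k in sorted(counts.items())}
```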

References

Olano, Facundo. 2018b. “Google-Play-Scraper.” https://github.com/facundoolano/google-play-scraper.

Olano, Facundo. 2018a. “App-Store-Scraper.” https://github.com/facundoolano/app-store-scraper.

Slack, Adam. 2018. “Chrome-Web-Store-Scraper.” https://github.com/AdamSlack/chrome-web-store-scraper.

Shen, Nelson, Michael-Jane Levitan, Andrew Johnson, Jacqueline Lorene Bender, Michelle Hamilton-Page, Alejandro (Alex) R Jadad, and David Wiljer. 2015. “Finding a Depression App: A Review and Content Analysis of the Depression App Marketplace.” JMIR mHealth and uHealth 3 (1): e16. https://doi.org/10.2196/mhealth.3713.

Stawarz, Katarzyna, Chris Preist, Debbie Tallon, Nicola Wiles, and David Coyle. 2018. “User experience of cognitive behavioral therapy apps for depression: An analysis of app functionality and user reviews.” Journal of Medical Internet Research 20 (6). https://doi.org/10.2196/10120.

Stawarz, Katarzyna, Anna L Cox, and Ann Blandford. 2014. “Don’t Forget Your Pill!: Designing Effective Medication Reminder Apps That Support Users’ Daily Routines.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2269–78. CHI ’14. New York, NY, USA: ACM. https://doi.org/10.1145/2556288.2557079.

Stawarz, Katarzyna, Anna L Cox, and Ann Blandford. 2015. “Beyond Self-Tracking and Reminders: Designing Smartphone Apps That Support Habit Formation.” In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, 2653–62. CHI ’15. New York, NY, USA: ACM. https://doi.org/10.1145/2702123.2702230.

Bender, Jacqueline Lorene, Rossini Ying Kwan Yue, Matthew Jason To, Laetitia Deacken, and Alejandro R Jadad. 2013. “A Lot of Action, But Not in the Right Direction: Systematic Review and Content Analysis of Smartphone Applications for the Prevention, Detection, and Management of Cancer.” J Med Internet Res 15 (12): e287. https://doi.org/10.2196/jmir.2661.

Orji, Rita, and Karyn Moffatt. 2018. “Persuasive technology for health and wellness: State-of-the-art and emerging trends.” Health Informatics Journal 24 (1): 66–91. https://doi.org/10.1177/1460458216650979.

Lyngs, Ulrik. 2018a. “A Cognitive Design Space for Supporting Self-Regulation of ICT Use.” In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, SRC14:1–SRC14:6. CHI EA ’18. New York, NY, USA: ACM. https://doi.org/10.1145/3170427.3180296.

Trevorscandalios. 2018. “Focusly.” https://chrome.google.com/webstore/detail/focusly/jlihnplddpebplnfafhdanaiapbeikbk?hl=gb.

Schungel, Willem. 2018. “Checkout of your phone.” https://itunes.apple.com/gb/app/checkout-of-your-phone/id1051880452?mt=8&uo=4.

Prettymind.co. 2018. “Timewaste Timer.” https://chrome.google.com/webstore/detail/timewaste-timer/pengblgbipcdkpigibniogojheaokckd?hl=gb.

Milk The Moment Inc. 2018. “The MILK App.” https://itunes.apple.com/gb/app/the-milk-app/id1340000116?mt=8&ign-mpt=uo%3D4.

Pavlok. 2018. “PAVLOK Productivity.” https://chrome.google.com/webstore/detail/pavlok-productivity/hefieeppocndiofffcfpkbfnjcooacib?hl=gb.

Flipd Inc. 2018. “Flipd.” https://play.google.com/store/apps/details?id=com.flipd.app&hl=en&gl=us.

Stanford HCI Group. 2018. “HabitLab for Chrome (Extension for Google Chrome web browser).” Stanford HCI Lab. https://habitlab.stanford.edu/.

Kovacs, Geza, Zhengxuan Wu, and Michael S Bernstein. 2018. “Rotating Online Behavior Change Interventions Increases Effectiveness but Also Increases Attrition.” Proc. ACM Hum.-Comput. Interact. 2 (CSCW). New York, NY, USA: ACM: 95:1–95:25. https://doi.org/10.1145/3274364.

Digital Wellness Warriors. 2018. “Apple: let developers help iPhone users with mental wellbeing.” https://www.change.org/p/apple-allow-digital-wellness-developers-to-help-ios-users.

Pinder, Charlie, Jo Vermeulen, Benjamin R. Cowan, and Russell Beale. 2018. “Digital Behaviour Change Interventions to Break and Form Habits.” ACM Transactions on Computer-Human Interaction 25 (3): 1–66. https://doi.org/10.1145/3196830.

⁴ Data and scripts for reproducing our analyses (as well as this paper, written in R Markdown; cf. Lyngs 2018b) are available at osf.io/zyj4h.

⁵ Apple’s iOS places more restrictions on developer access to operating system permissions than does Google’s Android, with the consequence that the iOS version of a digital self-control app is often much more limited than its Android counterpart (Mosemghvdlishvili and Jansz 2013). Because the purpose of our review was to investigate which areas of the design space these tools have explored (rather than differences between the iOS and Android ecosystems per se), we excluded the iOS version when an app was available in both stores.

⁶ For further detail, see osf.io/zyj4h.