3 Characterising the Design Space

3.1 Introduction

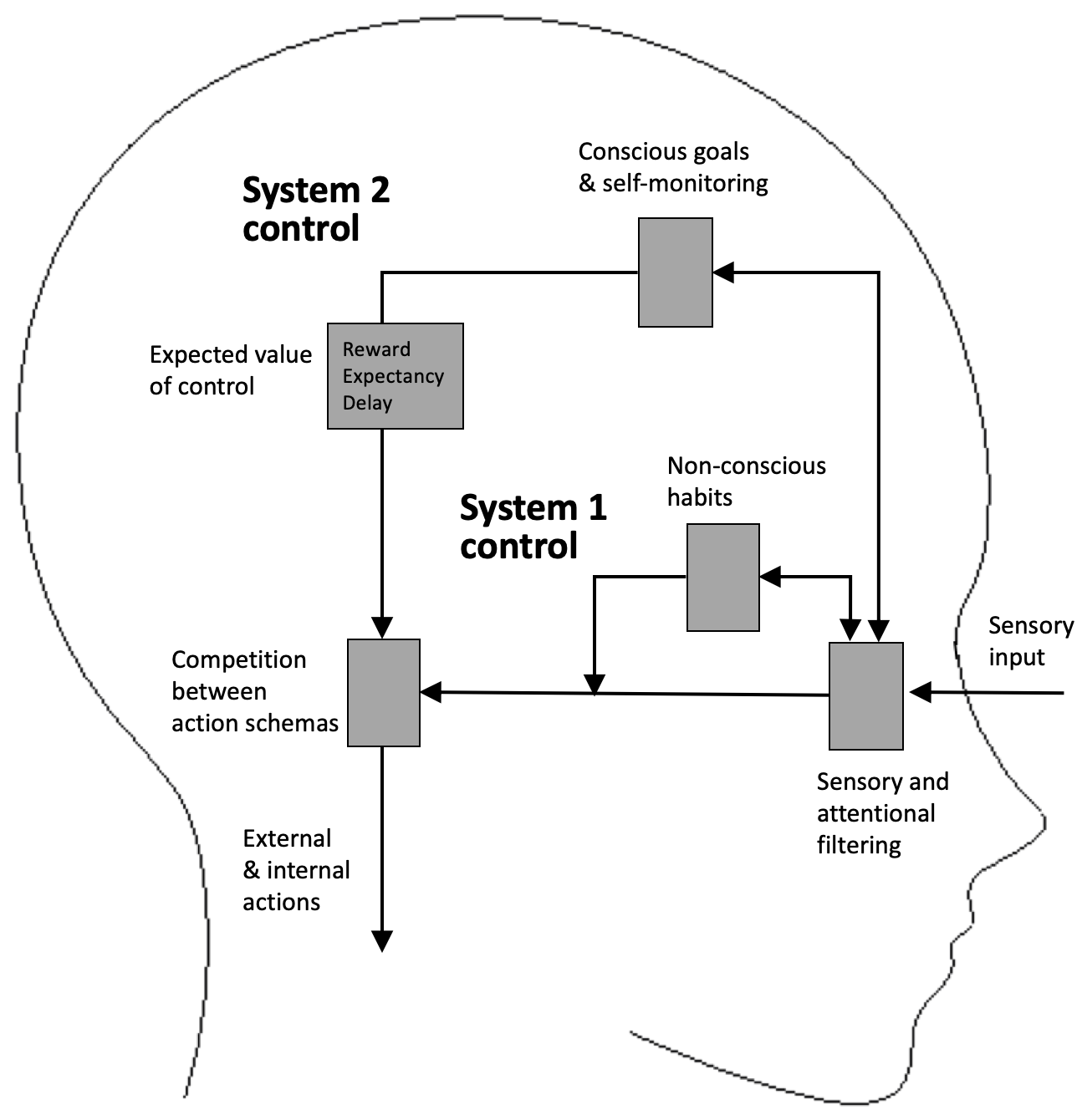

To answer this thesis’ main research question of how existing digital self-control tools can help us identify effective design patterns for supporting self-control over digital device use, we begin in this chapter by drawing on dual systems theory and exploring how it can be used to systematise and classify existing tools. We start by outlining the basics of the underlying psychological research. In doing so, we extend current applications of dual systems theories in research on digital behaviour change interventions (DBCIs) (Adams et al. 2015; Cox et al. 2016; Pinder et al. 2018, 2017) with the concept of ‘expected value of control.’ The neuroscience literature considers this concept central in explaining why success at self-control fluctuates over time and with emotional state (M. Botvinick and Braver 2015; Hendershot et al. 2011; Inzlicht, Schmeichel, and Macrae 2014; Ryan et al. 2014; Shenhav, Botvinick, and Cohen 2013; Tice, Bratslavsky, and Baumeister 2001). An overview of the resulting model is shown in Figure 3.1.

Subsequently, we review current digital self-control tools on the Chrome Web, Google Play, and Apple App stores, and apply the dual systems framework to organise and explain common design features, before pointing out gaps and opportunities for future work.

3.2 An integrative dual systems framework for digital self-control

Figure 3.1: An extended dual systems model of self-regulation, developed from Shea et al. (2014) and D. A. Norman and Shallice (1986). System 1 control is rapid and non-conscious, whereas System 2 control is slower, conscious, and capacity-limited. The strength of System 2 control is mediated by the expected value of control. Both systems influence competition between action schemas, the outcome of which causes behaviour.

3.2.1 System 1 and System 2

The core of dual systems theories is a major distinction between swift, parallel and non-conscious ‘System 1’ processes, and slower, capacity-limited and conscious ‘System 2’ processes (R. P. Cooper, Ruh, and Mareschal 2014; Kahneman 2011; E. K. Miller and Cohen 2001; B. T. Miller and D’Esposito 2005; Shea et al. 2014; Shiffrin and Schneider 1977; Smith and DeCoster 2000).2

System 1 control is driven by environmental inputs and internal states along with cognitive pathways that map the current situation to well-learned habits or instinctive responses (E. K. Miller and Cohen 2001). Behaviour driven by System 1 is often called ‘automatic,’ because System 1 control allows tasks to be initiated or performed without conscious awareness and with little interference with other tasks (D. A. Norman and Shallice 1986). Instinctive responses like scratching mosquito bites, or frequent patterns of digital device use like picking up one’s smartphone to check for notifications, can happen automatically via System 1 control (cf. M. Botvinick and Braver 2015; Oulasvirta et al. 2012; Van Deursen et al. 2015).

System 2 control is driven by goals, intentions, and rules held in conscious working memory (Baddeley 2003; B. T. Miller and D’Esposito 2005). From these central representations, signals are sent to cognitive systems that process sensory input, memory retrieval, emotional processing, and behavioural output, to guide responses accordingly (E. K. Miller and Cohen 2001). System 2 control is necessary when a goal requires planning or decision-making, or overcoming of habitual responses or temptations (D. A. Norman and Shallice 1986), for example if one has a goal of not scratching mosquito bites or not checking a smartphone notification.

3.2.2 Action schema competition

From a neuroscience perspective, the building blocks of behaviour are hierarchical action schemas, that is, control units for partially ordered sequences of action that achieve some goal when performed in the appropriate order (R. Cooper and Shallice 2000; D. A. Norman and Shallice 1986). Action schemas exist at varying levels of complexity, from simple single-action motor schemas for grasping and twisting, to higher level schemas for, e.g., preparing tea by filling and boiling a kettle (M. M. Botvinick 2008; Shallice and Cooper 2011). The schemas compete for control over behaviour in a ‘competitive selection’ process in which schemas act like nodes in a network, each with a continuous activation value (Shallice and Cooper 2011), and the ‘winner’ is the node with the strongest activation (Knudsen 2007; Pinder et al. 2018). Schema nodes are activated by a number of sources, including sensory input via System 1 processes (‘bottom-up’), ‘parental’ influence from super-ordinate schemas in the hierarchy, and top-down influence from System 2 control (R. Cooper and Shallice 2000; Shallice and Cooper 2011).
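The competitive selection process described above can be sketched in a few lines of code. This is a minimal illustration rather than a model taken from the cited literature: the additive combination of activation sources, the schema names, and all numeric values are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Schema:
    """An action schema node with activation from three sources (illustrative)."""
    name: str
    bottom_up: float = 0.0   # sensory input via System 1 processes
    parental: float = 0.0    # influence from super-ordinate schemas
    top_down: float = 0.0    # influence from System 2 control

    @property
    def activation(self) -> float:
        # Assumption: the sources combine additively. The text only states
        # that each node has a continuous activation value.
        return self.bottom_up + self.parental + self.top_down


def select_winner(schemas: list[Schema]) -> Schema:
    """Return the schema with the strongest activation."""
    return max(schemas, key=lambda s: s.activation)


check_phone = Schema("check phone", bottom_up=0.8)
keep_working = Schema("keep working", parental=0.3, top_down=0.2)

# With weak System 2 input, the habitual response has the higher activation.
habit_wins = select_winner([check_phone, keep_working]).name

# Stronger top-down control tips the competition the other way.
keep_working.top_down = 0.7
control_wins = select_winner([check_phone, keep_working]).name
```

In this toy setting, the only difference between the two outcomes is the amount of top-down activation, which is the quantity that the expected value of control is later argued to modulate.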

3.2.3 Self-regulation and self-control

Following others, we use self-regulation as an umbrella term for regulatory processes in the service of goal-directed behaviour, including automatic System 1 habits, and self-control more restrictively for conscious and deliberate System 2 control in situations where immediate impulses conflict with enduringly valued goals (Baumeister, Vohs, and Tice 2007; Angela L. Duckworth, Gendler, and Gross 2014; Hagger et al. 2010; Milyavskaya and Inzlicht 2018). For example, if a person wishes to be less distracted by her smartphone in social situations, and through repetition has acquired a habit of turning the phone face-down to the point that she now does it without conscious attention, this counts as self-regulation. If in a given moment she feels an urge to flip it over and check for notifications, but consciously suppresses this impulse and does not act on it, this counts as self-control.

Self-regulation and self-control are mediated by feedback functions for monitoring the state of oneself and the environment, comparing this state to goals and standards (cf. Shea et al. 2014), and acting to modify the situation accordingly (Carver and Scheier 1981, 1998; Powers 1973; cf. cybernetic models of behaviour control, Baumeister and Exline 2000; Inzlicht, Legault, and Teper 2014).

3.2.4 Attentional filtering

For goals, rules, or intentions to guide System 2 control, they must first enter working memory (Baddeley 2003; B. T. Miller and D’Esposito 2005). Entry of information from the external world, internal states, or memory stores into working memory is itself a competitive process, in which the signals with the highest activation values are given access by attentional filters (Constantinidis and Wang 2007; Knudsen 2007; E. K. Miller and Cohen 2001):

Automatic bottom-up filters look out for stimulus properties that are likely to be important, either through innate sensitivities (e.g., sudden or looming noises) or learned associations (e.g., a smartphone notification) and boost their signal strength (Knudsen 2007). In this way, some stimuli may evoke a response strong enough to gain automatic access to working memory even while we have our minds on other things (Egeth and Yantis 1997; Itti and Koch 2001). For example, clickbait may use headlines and imagery with properties that make bottom-up attention filters put its information on a fast track to conscious working memory or trigger click-throughs via System 1 control (cf. Blom and Hansen 2015).

Conscious System 2 control can also direct attention towards particular internal or external sources of information (e.g., focusing on a distorted voice in one’s cellphone on a crowded train), which increases the signal strength of those sources and makes the information they carry more likely to enter working memory (Corbetta et al. 1991; Knudsen 2007; Müller, Philiastides, and Newsome 2005).

3.2.5 Self-control limitations and the Expected Value of Control

A central puzzle is why people often fail to act in accordance with their own valued goals, even when they are aware of the mismatch (A. L. Duckworth, Gendler, and Gross 2016). According to current research on cognitive control, the two key factors to answer this question are (i) limitations on System 2 control in relation to capacity; and (ii) fluctuations due to emotional state and fatigue (M. Botvinick and Braver 2015).

Capacity limitations

The amount of information that can be held in working memory and guide System 2 control is limited (classically ‘seven, plus or minus two’ chunks of meaningful information, Cowan (2010); G. A. Miller (1956)). Therefore, self-control can fail if the relevant goals are simply not represented in working memory at the time of action (Kotabe and Hofmann 2015). This is one explanation for why people often struggle to manage use of e.g. Facebook or email: one opens the application with a particular goal in mind, but information from the newsfeed or inbox hijacks attention and crowds out the initial goal (cf. section 5.5.8.1).

Fluctuations due to emotional state and fatigue

System 2 control often suffers from fatigue effects if exerted continuously (Blain, Hollard, and Pessiglione 2016; Chance et al. 2012; Dai et al. 2015; Hagger et al. 2010; Hockey 2013) and also fluctuates with emotional state (Tice, Bratslavsky, and Baumeister 2001). For example, negative mood is a strong predictor of relapse of behaviour people attempt to avoid (Hendershot et al. 2011; W. R. Miller et al. 1996; Tice, Bratslavsky, and Baumeister 2001), and studies of Facebook use have specifically found that users are worse at regulating the time they spend on the platform when in a bad mood (Ryan et al. 2014; cf. Montag et al. 2017).

The emerging consensus explanation of these fluctuations is that the strength of System 2 control is mediated by a cost-benefit analysis of the outcome that exercising self-control might bring about (M. Botvinick and Braver 2015), known as the expected value of control (EVC) (Shenhav, Botvinick, and Cohen 2013)3. The research suggests that EVC is influenced by at least three major factors:

First, EVC increases the more reward people perceive they could obtain (or the greater the loss that could be avoided) through successful self-control (Adcock et al. 2006; M. Botvinick and Braver 2015; Padmala and Pessoa 2010, 2011). To illustrate, consider ‘phone stack,’ a game in which a group dining at a restaurant begin by stacking up their phones on the table. The first person to take their phone from the stack to check it has to pay the entire bill (Ha 2012; Tell 2013). This game aids self-control over device use by introducing a financial (and reputational) cost which adjusts the overall expected value of control (cf. Ko et al. (2015)’s app NUGU, which leveraged social incentives to help participants reduce smartphone use, see Table 2.1).

Second, EVC increases the greater the expectancy, or perceived likelihood, that one will be able to bring a given outcome about through self-control (Bernoulli 1954; Steel and König 2006; Vroom 1964; cf. ‘self-efficacy’ in Social Cognitive Theory, Bandura 1991). In the phone stack example, people may try harder to suppress an urge to check their phone, the more confidence they have in their ability to control themselves in the first place.

Third, EVC decreases the longer the delay before the outcome that self-control might bring about (cf. ‘future discounting,’ Ainslie (2001); Ainslie (2010); Ariely and Wertenbroch (2002); Critchfield and Kollins (2001); O. Evans et al. (2016); McClure et al. (2007); O’Donoghue and Rabin (2001)). In phone stack, we should expect people to be worse at suppressing an impulse to check their phone if the rules were changed so that the loser would only pay the bill for a meal in a year’s time.
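The direction of these three influences can be made concrete with a toy calculation. The sketch below is an illustration under stated assumptions, not the model used in the cited work: it borrows the hyperbolic form that is common in the future-discounting literature, and the function name `expected_value_of_control`, the discount rate `k`, and all numbers are hypothetical.

```python
def expected_value_of_control(reward: float, expectancy: float,
                              delay_days: float, k: float = 0.05) -> float:
    """Toy EVC score: rises with reward and expectancy, falls with delay.

    Assumption: hyperbolic discounting, reward * expectancy / (1 + k * delay).
    Neither the functional form nor the rate k is taken from the text.
    """
    if not 0.0 <= expectancy <= 1.0:
        raise ValueError("expectancy is a probability and must lie in [0, 1]")
    if delay_days < 0:
        raise ValueError("delay_days must be non-negative")
    return reward * expectancy / (1.0 + k * delay_days)


# Phone stack: the loser pays tonight's bill versus a bill in a year's time.
tonight = expected_value_of_control(reward=100.0, expectancy=0.8, delay_days=0)
next_year = expected_value_of_control(reward=100.0, expectancy=0.8, delay_days=365)

# Same stakes tonight, but with less confidence in one's own self-control.
low_confidence = expected_value_of_control(reward=100.0, expectancy=0.4, delay_days=0)
```

Under these assumptions, both the delayed bill and the lower expectancy yield a smaller score than the baseline, mirroring the second and third factors above.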

3.2.6 A practical example

As a concrete illustration of the dual systems framework and the benefits of including EVC, consider a student who opens his laptop to work on an essay. However, he instead checks Facebook and spends an inordinate amount of time scrolling the newsfeed, experiencing feelings of regret at having done so when he finally returns to the essay. This is not the first time this has happened, even though his reflective goal is always to do solid work on the essay as the first thing, and to only allow himself to check Facebook briefly during breaks.

The dual systems framework suggests we consider this situation in terms of the perceptual cues in the context, automatic System 1 behaviour control, System 2’s consciously held goals and self-monitoring, and System 2’s expected value of control:

If the student normally checks Facebook when opening his laptop, this context may trigger a habitual check-in via System 1 control. His goal of working first thing might be present in his working memory, but he may fail to override his checking habit due to his expected value of control being low. This might be because he does not get any reward from inhibiting the impulse to check Facebook; because he has little confidence in his own ability to suppress this urge (low expectancy); or because the rewards from working on his essay are delayed because it is only due in two months. Alternatively, his goal of working on the essay first thing might not be present in his working memory, in which case no System 2 control is initiated to override the checking habit.

After having opened Facebook, he might remember that he should be working on the essay, but attention-grabbing content from the newsfeed enters his capacity-limited working memory and crowds out this goal, leading him to spend more time on Facebook than intended.

3.3 A review and analysis of current digital self-control tools

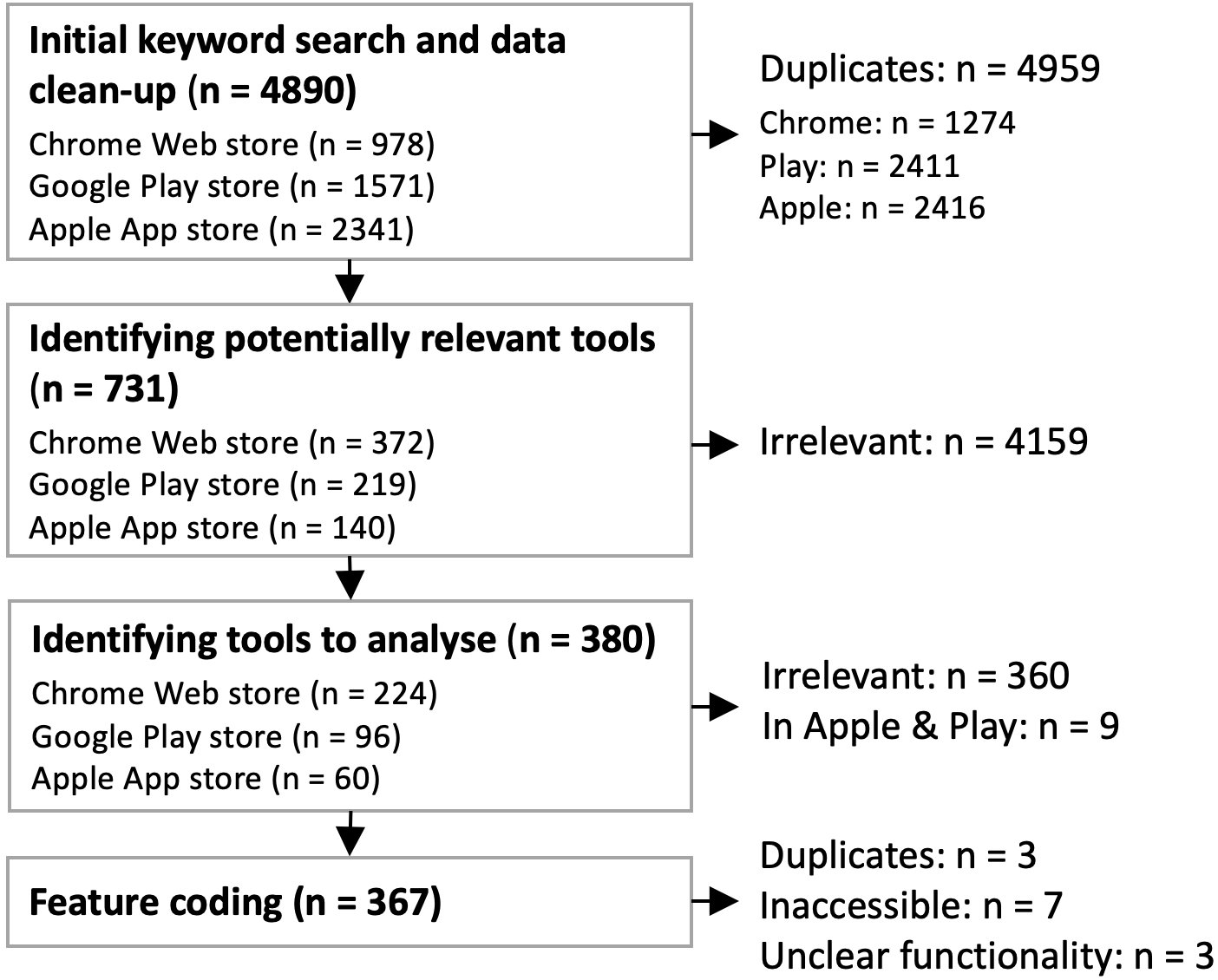

To explore how this model may be useful in mapping digital self-control interventions, we conducted a systematic review and analysis of apps on the Google Play and Apple App stores, as well as browser extensions on the Chrome Web store. We identified apps and browser extensions described as helping users exercise self-control / avoid distraction / manage addiction in relation to digital device use, coded their design features, and mapped them to the components of the dual systems framework.

3.3.1 Methods

3.3.1.1 Initial keyword search and data clean up

For the Google Play and Apple App stores, we used pre-existing scripts (Olano 2018a, 2018b) to download search results for the terms ‘distraction,’ ‘smartphone distraction,’ ‘addiction,’ ‘smartphone addiction,’ ‘motivation,’ ‘smartphone motivation,’ ‘self-control’ and ‘smartphone self-control.’ For the Chrome Web store, we developed our own scraper (Slack 2018) and downloaded search results for the same key terms, but with the prefix ‘smartphone’ changed to ‘laptop’ as well as ‘internet’ (e.g. ‘laptop distraction’ and ‘internet distraction’). We separately scraped apps and extensions on the US and UK stores between 22nd and 27th August 2018. After excluding duplicate results returned by multiple search terms and/or by both the US and UK stores, this resulted in 4890 distinct apps and extensions (1571 from Google Play, 2341 from the App Store, and 978 from the Chrome Web store).
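The de-duplication step can be sketched as follows. This is not the script used in the review: the record fields (`store`, `country`, `term`, `item_id`) and the example identifiers are hypothetical, and we assume that a tool is uniquely identified by its store together with a store-specific identifier.

```python
from typing import NamedTuple


class SearchHit(NamedTuple):
    store: str     # e.g. "google_play", "app_store", "chrome_web"
    country: str   # "US" or "UK"
    term: str      # search term that returned the hit
    item_id: str   # store-specific identifier (hypothetical field)


def deduplicate(hits: list[SearchHit]) -> dict[tuple[str, str], SearchHit]:
    """Keep the first hit per (store, item_id), ignoring country and search term."""
    unique: dict[tuple[str, str], SearchHit] = {}
    for hit in hits:
        unique.setdefault((hit.store, hit.item_id), hit)
    return unique


# Made-up hits: the same app returned by two countries and two search terms.
hits = [
    SearchHit("google_play", "US", "distraction", "com.example.focus"),
    SearchHit("google_play", "UK", "distraction", "com.example.focus"),
    SearchHit("google_play", "US", "smartphone addiction", "com.example.focus"),
    SearchHit("chrome_web", "US", "laptop distraction", "abcdefgh"),
]
unique_hits = deduplicate(hits)
```

Keying on the store as well as the identifier keeps an app that appears on two stores as two records, which matches the order of the procedure: cross-store duplicates are only dropped at a later screening stage.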

3.3.1.2 Identifying potentially relevant apps and extensions

Following similar reviews (Shen et al. 2015; Stawarz et al. 2018), we then manually screened the titles and short descriptions (if available; otherwise the first paragraphs of the full description). We included apps and extensions explicitly designed to help people self-regulate their digital device use, while excluding tools intended for general productivity, self-regulation in other domains than digital device use, or which were not available in English (for detailed exclusion criteria, see osf.io/zyj4h).

This resulted in 731 potentially relevant apps and extensions (219 from Google Play, 140 from the App Store, and 372 from the Chrome Web store).

3.3.1.3 Identifying apps and extensions to analyse

We reviewed the remaining tools in more detail by reading their full descriptions. If it remained unclear whether an app or extension should be excluded, we also reviewed its screenshots. If an app existed in both the Apple App store and the Google Play store, we dropped the version from the Apple App store.4

After this step, we were left with 380 apps and extensions to analyse (96 from Google Play, 60 from the App Store, and 224 from the Chrome Web store).

Figure 3.2: Flowchart of the search and exclusion/inclusion procedure

3.3.1.4 Feature coding

Following similar reviews, we coded functionality based on the descriptions, screenshots, and videos available on a tool’s store page (cf. Shen et al. 2015; Stawarz, Cox, and Blandford 2014, 2015; Stawarz et al. 2018). We iteratively developed a coding sheet of feature categories (cf. Bender et al. 2013; Orji and Moffatt 2018), with the prior expectation that the relevant features would be usefully classified as subcategories of the main feature clusters ‘block/removal,’ ‘self-tracking,’ ‘goal advancement’ and ‘reward/punishment’ (drawing on previous work, Lyngs (2018a)).

Initially, two collaborators and I each independently reviewed and classified features in 10 apps and 10 browser extensions (for a total of 30 unique apps and 30 unique browser extensions) before comparing and discussing the feature categories identified to create the first iteration of the coding sheet. Using this coding sheet, the two collaborators independently reviewed 60 additional apps and browser extensions each while I reviewed these same 120 tools, as well as all remaining tools. After comparing and discussing the results, we developed the final codebook, on the basis of which I revisited and recoded the features in all tools. In addition to the granular feature coding, we noted which main feature cluster(s) represented a tool’s ‘core’ design, according to the guideline that 25% or more of the tool’s functionality related to that cluster (a single tool could belong to multiple clusters).5
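The 25% guideline for ‘core’ clusters can be illustrated with a short sketch. How a tool’s functionality was quantified is not spelled out here, so the code assumes, purely for illustration, that it is approximated by the share of a tool’s coded features falling in each cluster; the function name `core_clusters` and the example tools are hypothetical.

```python
from collections import Counter

CORE_THRESHOLD = 0.25  # guideline stated in the text


def core_clusters(feature_clusters: list[str]) -> set[str]:
    """Return clusters accounting for at least 25% of a tool's coded features.

    Assumption: 'functionality' is approximated by the number of coded features.
    """
    if not feature_clusters:
        return set()
    counts = Counter(feature_clusters)
    total = len(feature_clusters)
    return {c for c, n in counts.items() if n / total >= CORE_THRESHOLD}


# Hypothetical tool with five coded features:
# block/removal is 3/5 and self-tracking is 2/5, so both count as core.
tool = ["block/removal"] * 3 + ["self-tracking"] * 2
tool_core = core_clusters(tool)

# Hypothetical tool where reward/punishment (1/5) falls below the threshold.
other = ["block/removal"] * 4 + ["reward/punishment"]
other_core = core_clusters(other)
```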

During the coding process, we excluded a further 13 tools — 3 duplicates, e.g., where ‘pro’ and ‘lite’ versions had no difference in described functionality, 7 that had become inaccessible after the initial search, and 3 that lacked sufficiently well-described functionality to be coded. This left 367 tools in the final dataset.
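The counts reported at each stage of the procedure can be tallied as a quick consistency check. The snippet only restates numbers given in the text; the dictionary keys are labels chosen for illustration.

```python
# Per-store counts as reported at each stage of the procedure.
initial = {"google_play": 1571, "app_store": 2341, "chrome_web": 978}
screened = {"google_play": 219, "app_store": 140, "chrome_web": 372}
full_review = {"google_play": 96, "app_store": 60, "chrome_web": 224}

# Exclusions made during feature coding.
excluded_during_coding = {
    "duplicates": 3,
    "inaccessible": 7,
    "insufficient_description": 3,
}

initial_total = sum(initial.values())
screened_total = sum(screened.values())
full_review_total = sum(full_review.values())
final_total = full_review_total - sum(excluded_during_coding.values())
```

The four totals correspond to the 4890, 731, 380, and 367 tools reported in the text.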

3.3.2 Results

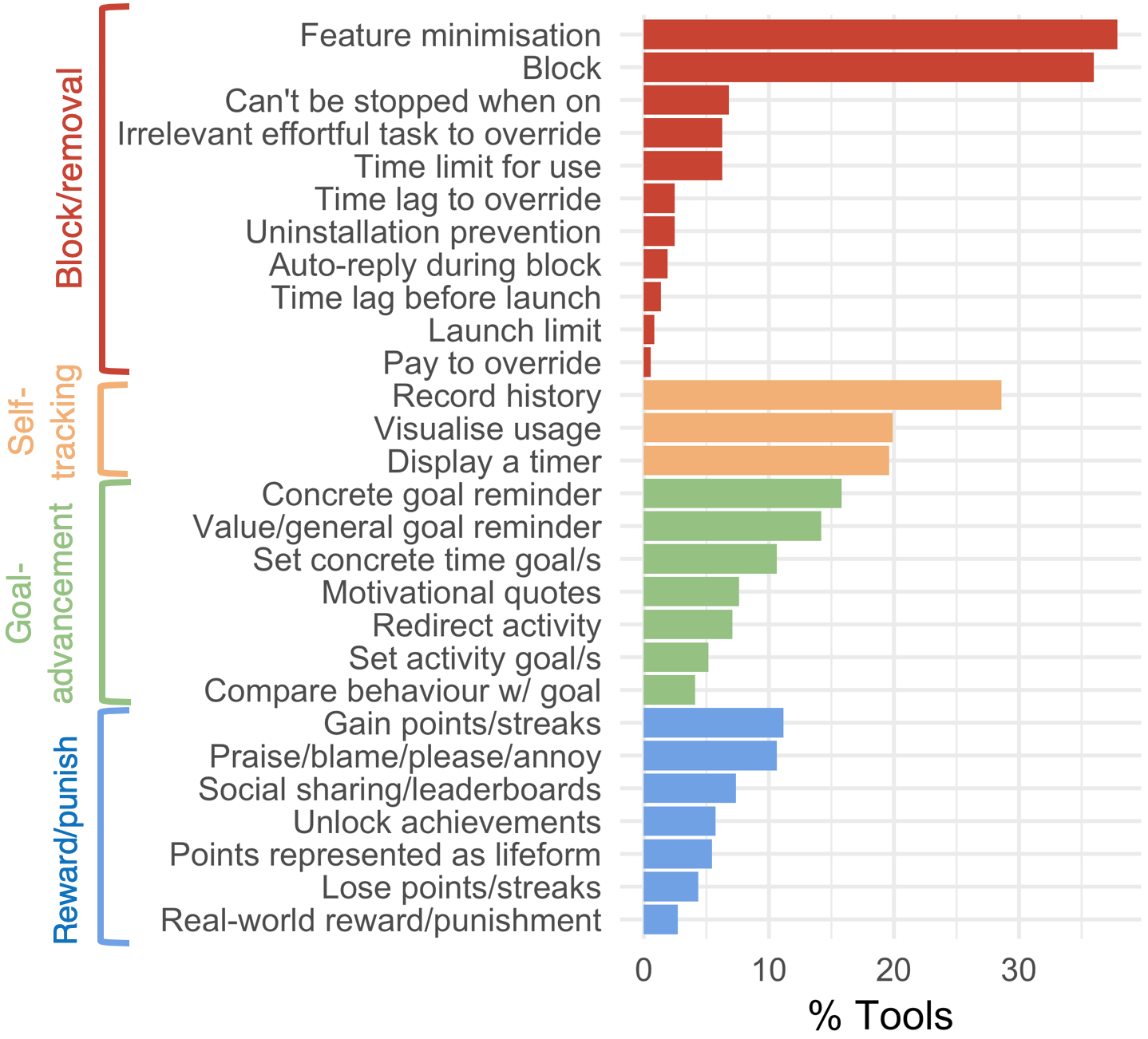

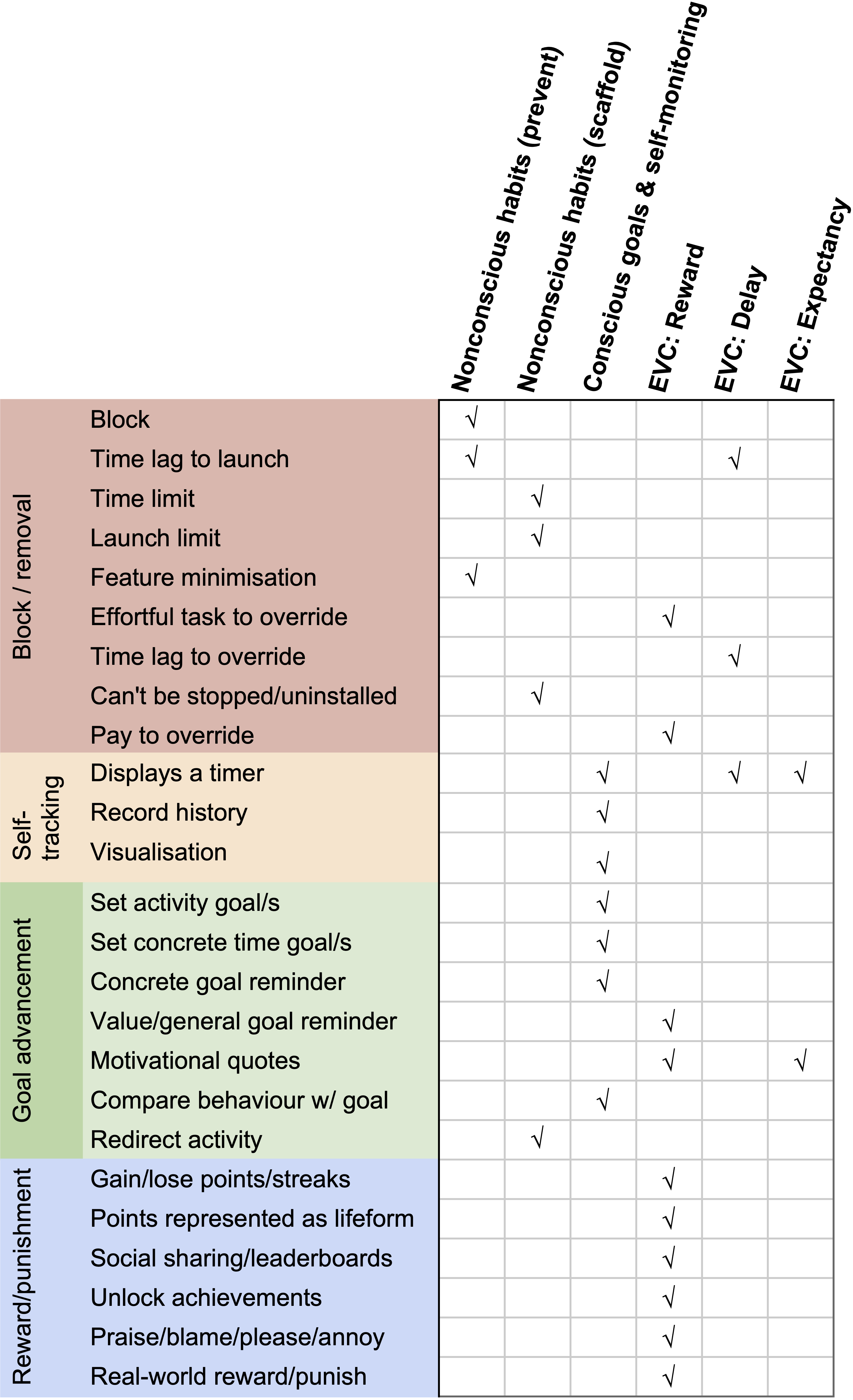

Figure 3.3: Functionality of digital self-control tools (N = 367)

3.3.2.1 Feature prevalence

A summary of the prevalence of features is shown in Figure 3.3. The most frequent feature cluster related to blocking or removing distractions, some variation of which was present in 74% of tools. 44% (163) enabled the user to put obstacles in the way of distracting functionality by either blocking access entirely (132 tools), by setting limits on how much time could be spent (23 tools) or how many times distracting functionality could be launched (3 tools) before being blocked, or by adding a time lag before distracting functionality would load (5 tools). 14% (50 tools) also added friction if the user attempted to remove the blocking, including disallowing a blocking session from being stopped (25 tools), requiring the user to first complete an irrelevant effortful task or type in a password (23 tools), tinkering with administrator permissions to prevent the tool from being uninstalled (9 tools), or adding a time lag before the user could override blocking or change settings (9 tools). For example, the Focusly Chrome extension (Trevorscandalios 2018) blocks sites on a blacklist; if the user wishes to override the blocking, she must correctly type in a series of 46 arrow keys (e.g., \(\rightarrow\uparrow\downarrow\rightarrow\leftarrow\rightarrow\)...) to enter the blocked site.
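The ‘effortful task’ form of friction can be sketched generically. The code below does not reproduce how Focusly or any other reviewed tool is implemented; the function names, the use of a random sequence, and the all-or-nothing check are assumptions made for illustration, with only the sequence length of 46 taken from the example above.

```python
import random
from typing import Optional

ARROWS = "←↑→↓"


def make_challenge(length: int = 46, seed: Optional[int] = None) -> str:
    """Generate a random arrow-key sequence that the user must reproduce."""
    rng = random.Random(seed)
    return "".join(rng.choice(ARROWS) for _ in range(length))


def may_override(challenge: str, typed: str) -> bool:
    """Allow the override only if the entire sequence was typed correctly."""
    return typed == challenge


# A fixed seed is used here only to make the example reproducible.
challenge = make_challenge(seed=1)
complete_attempt = may_override(challenge, challenge)
incomplete_attempt = may_override(challenge, challenge[:-1])
```

The design choice worth noting is that the cost of overriding scales with the sequence length, so a designer could tune how much friction is imposed.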

Rather than blocking content per se, an alternative approach, taken by 38% of tools (139), was to reduce the user’s exposure to distracting options in the first place. This approach was dominated by browser extensions (121 of these tools were from the Chrome Web store) typically in the form of removing elements from specific sites (67 tools; e.g., removing newsfeeds from social media sites or hiding an email inbox). The sites most frequently targeted were Facebook (26 tools), YouTube (17), Twitter (11) and Gmail (7). Also popular were general ‘reader’ extensions for removing distracting content when browsing the web (27 tools) or when opening new tabs (24). Other notable examples were ‘minimal-writing’ tools (22 tools) which remove functionality irrelevant to, or distracting from, the task of writing. Finally, a few Android apps (4 tools) limited the amount of functionality available on devices’ home screen.

The second most prevalent feature cluster related to self-tracking, some variation of which was present in 38% of tools (139). Out of these, 105 tools recorded the user’s history, 73 provided visualisations of the captured data, and 72 displayed a timer or countdown. In 42 tools, the self-tracking features focused on the time during which the user managed not to use their digital devices, such as the iOS app Checkout of your phone (Schungel 2018).

The third most prevalent feature cluster related to goal advancement, some variation of which was present in 35% of tools (130). 58 tools implemented reminders of a concrete time goal or task the user tried to complete (e.g., displaying pop-ups when a set amount of time has been spent on a distracting site or replacing the content of newsfeeds or new tabs with todo-lists) and 52 tools provided reminders of more general goals or personal values (e.g., in the form of motivational quotes). 58 tools asked the user to set explicit goals, either for how much time they wanted to spend using their devices in total or in specific apps or websites (39 tools), or for the tasks they wanted to focus on during use (19 tools). 15 tools allowed the user to compare their actual behaviour against the goals they set.

The fourth most prevalent feature cluster, present in 22% of tools (80), related to reward/punishment, i.e., providing some form of rewards or punishments for the way in which a device is used. Some of these features were gamification design patterns such as collecting points/streaks (41 tools), leaderboards or social sharing (27), or unlocking of achievements (21). In 20 tools, points were represented as some lifeform (e.g., an animated goat or a growing tree) which might be harmed if the user spent too much time on certain websites or used their phone during specific times. 10 tools added real-world rewards or punishments, e.g., making the user lose money if they spend more than 1 hour on Facebook in a day (Timewaste Timer, Prettymind.co (2018)), allowing virtual points to be exchanged for free coffee or shopping discounts (MILK, Milk The Moment Inc. (2018)), or even letting the user administer electric shocks to herself when accessing blacklisted websites (!) (PAVLOK, Pavlok (2018)).

Finally, 35% of tools (129) gave the user control over what counted as ‘distraction,’ e.g., by letting the user customise which apps or websites to restrict access to. Among tools implementing blocking functionality, 101 tools implemented blacklists (i.e., blocking specific apps or sites, allowing everything else), while 22 tools implemented whitelists (i.e., allowing only specified apps or sites while blocking everything else).
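The difference between the two list types comes down to which default applies to unlisted items. A minimal sketch, with hypothetical names (`ListMode`, `is_blocked`) and made-up site lists:

```python
from enum import Enum


class ListMode(Enum):
    BLACKLIST = "blacklist"  # block listed items, allow everything else
    WHITELIST = "whitelist"  # allow listed items, block everything else


def is_blocked(site: str, listed: set[str], mode: ListMode) -> bool:
    """Decide whether a site is blocked under the given list mode."""
    if mode is ListMode.BLACKLIST:
        return site in listed
    return site not in listed


# Made-up lists for illustration.
distracting = {"facebook.com", "youtube.com"}
work_only = {"docs.example.org"}

blacklisted_site = is_blocked("facebook.com", distracting, ListMode.BLACKLIST)
unlisted_under_blacklist = is_blocked("wikipedia.org", distracting, ListMode.BLACKLIST)
unlisted_under_whitelist = is_blocked("wikipedia.org", work_only, ListMode.WHITELIST)
whitelisted_site = is_blocked("docs.example.org", work_only, ListMode.WHITELIST)
```

An unlisted site such as the hypothetical `wikipedia.org` is allowed under a blacklist but blocked under a whitelist, which makes whitelists the stricter default.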

3.3.2.2 Feature combinations

65% of tools implemented only one type of feature cluster in their core design, most frequently blocking/removing distractions (53%). 32% (117 tools) combined two main feature clusters, most frequently block/removal in combination with goal-advancement (40 tools; e.g., replacing the Facebook newsfeed with a todo list, or replacing distracting websites with a reminder of the task to be achieved) or self-tracking in combination with reward/punishment features (30 tools; e.g., a gamified pomodoro timer in which an animated creature dies if the user leaves the app before the timer runs out). Block/removal core designs were also commonly combined with self-tracking (24 tools; e.g., blocking distracting websites while a timer counts down, or recording and displaying how many times during a block session the user tried to access blacklisted apps). Only two tools (Flipd, Flipd Inc. (2018), and HabitLab, Stanford HCI Group (2018)) combined all four feature clusters in their core design, with the Chrome extension HabitLab (developed by the Stanford HCI Group) cycling through different types of interventions to learn what best helps the user align internet use with their stated goals (cf. Kovacs, Wu, and Bernstein 2018).

3.3.2.3 Store comparison

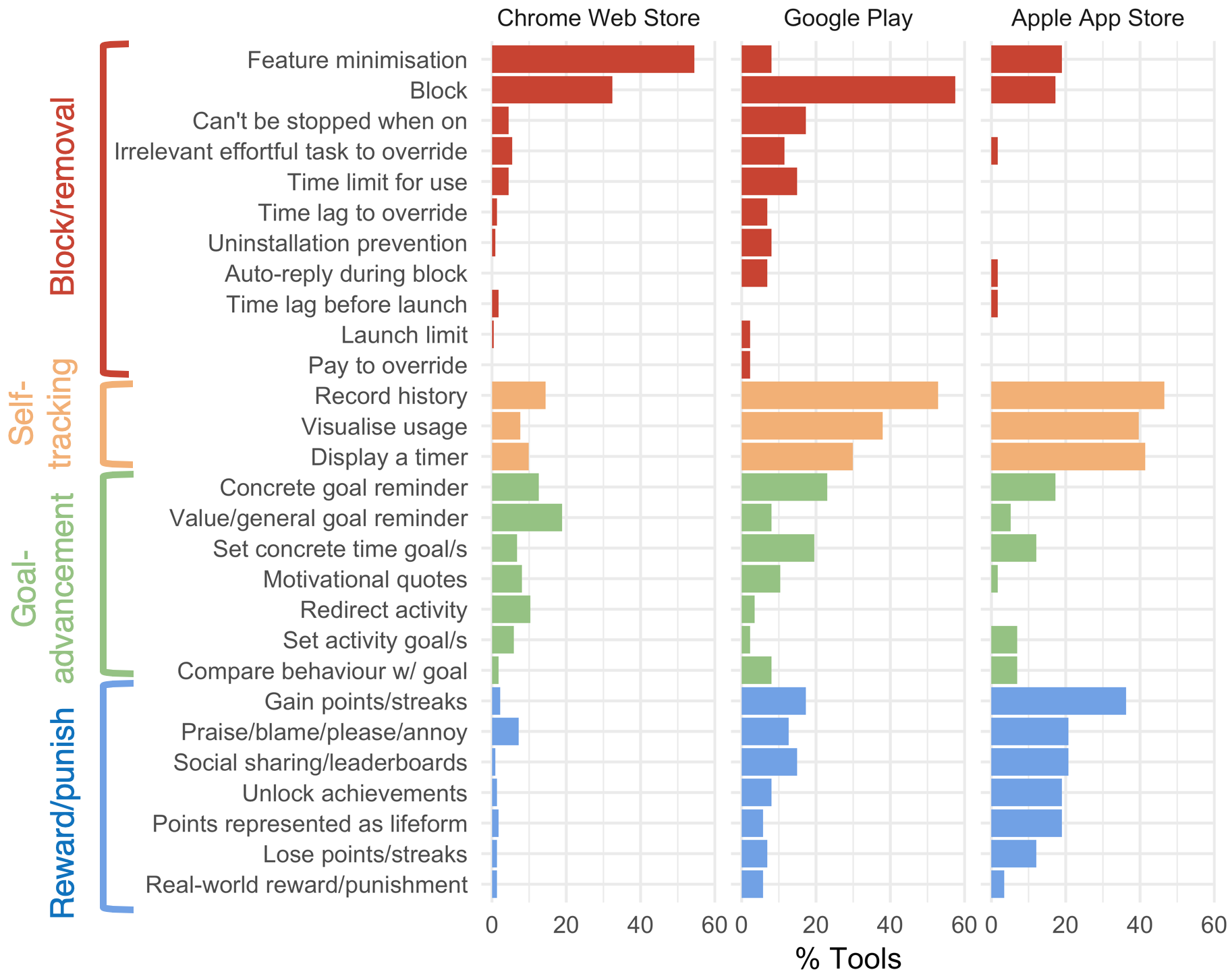

Figure 3.4: Functionality of digital self-control tools on Chrome Web (n = 223), Google Play (n = 86) and Apple App Store (n = 58)

Figure 3.4 summarises the prevalence of features, comparing the three stores. The differences between the stores appear to mirror the granularity of system control available to developers: Feature minimisation, in the form of removing particular aspects of the user interface, is common in browser extensions, presumably because developers here can wield precise control over the elements displayed on HTML pages by injecting client-side CSS and JavaScript. On mobile devices, however, developers have little control over how another app is displayed, leaving blocking or restricting access as the only viable design patterns. The differences between the Android and iOS ecosystems are apparent, as the permissions necessary to implement, e.g., scheduled blocking of apps are not available to iOS developers. These differences across stores suggest that if mobile operating systems granted more permissions (as some developers of popular anti-distraction tools have petitioned Apple to do, Digital Wellness Warriors (2018)), developers would respond by creating tools that offer more granular control of the mobile user interface, similar to those that already exist for the Chrome web browser.

3.3.2.4 Mapping identified tool features to theory

Figure 3.5: Mapping design features to an integrative dual systems model of self-regulation

Figure 3.5 shows a matrix of how the design features correspond to the main components of the dual systems framework, in terms of the cognitive components they have the most immediate potential to influence: Non-conscious habits are influenced by features that block the targets of habitual action or the user interface elements that trigger them, thereby preventing unwanted habits from being activated. Non-conscious habits are also influenced by features which enforce limits on daily use, or redirect user activity, thereby scaffolding formation of new habits. Conscious goals & self-monitoring is influenced by explicit goal setting and reminders, as well as by timing, recording, and visualising usage and comparing it with one’s goals. The reward component of the expected value of control is influenced by reward/punishment features that add incentives for exercising self-control, as well as by value/general goal reminders and motivational quotes which encourage the user to reappraise the value of immediate device use in light of what matters in their life; the delay component is influenced by time lags or timers; and expectancy is similarly influenced by timers (‘I should be able to manage to control myself for just 20 minutes!’) as well as motivational quotes.

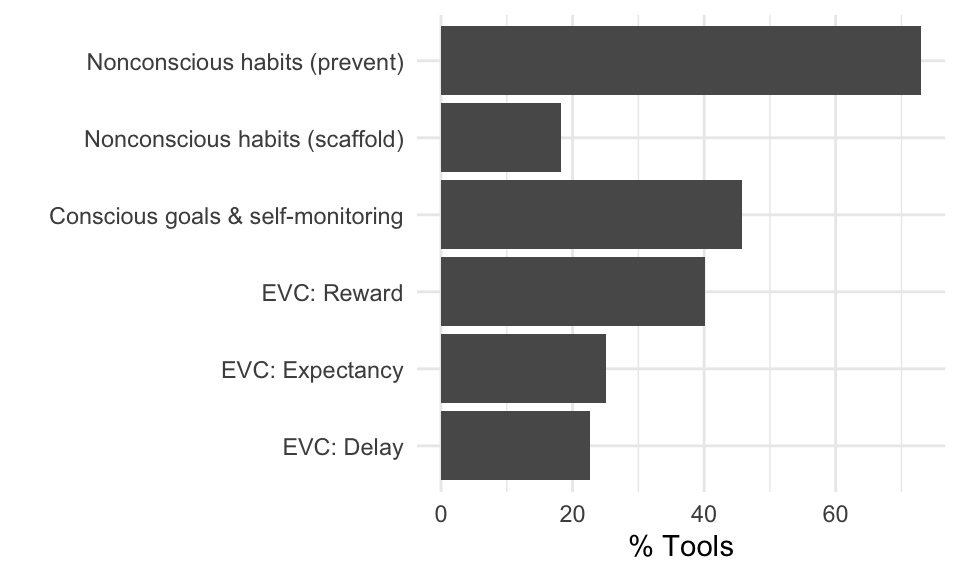

Figure 3.6: Percentage of tools which include at least one design feature targeting a given cognitive component of the dual systems framework.

Given this mapping, the percentage of tools in which at least one design feature maps to a given cognitive component is shown in Figure 3.6. Similarly to DBCI reviews (Pinder et al. 2018; Stawarz, Cox, and Blandford 2015), we find the lowest prevalence of features that scaffold formation of non-conscious habits (18%), followed by features that influence the delay component of the expected value of control (23%). The current landscape of digital self-control tools is dominated by features which prevent activation of unwanted non-conscious habits (73%) and thereby stop undesirable responses from winning out in action schema competition by making them unavailable.
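The calculation behind such percentages can be sketched as follows. The mapping below is a small hypothetical excerpt written to be consistent with the description of Figure 3.5, not the full matrix, and the four tools are made up rather than drawn from the reviewed dataset.

```python
# Hypothetical excerpt of a feature-to-component mapping (cf. Figure 3.5).
FEATURE_TO_COMPONENTS = {
    "block_access": {"habit_prevention"},
    "redirect_activity": {"habit_scaffolding"},
    "goal_reminder": {"goals_self_monitoring"},
    "timer": {"goals_self_monitoring", "delay", "expectancy"},
    "points": {"reward"},
}


def component_prevalence(tools: dict[str, set[str]]) -> dict[str, float]:
    """Percentage of tools with at least one feature targeting each component."""
    if not tools:
        return {}
    components = set().union(*FEATURE_TO_COMPONENTS.values())
    result = {}
    for component in sorted(components):
        # Count tools with any feature that maps to this component.
        n = sum(
            any(component in FEATURE_TO_COMPONENTS.get(f, set()) for f in features)
            for features in tools.values()
        )
        result[component] = 100.0 * n / len(tools)
    return result


# Four made-up tools and their coded features.
toy_tools = {
    "tool_a": {"block_access"},
    "tool_b": {"block_access", "timer"},
    "tool_c": {"goal_reminder", "points"},
    "tool_d": {"redirect_activity"},
}
prevalence = component_prevalence(toy_tools)
```

Because a tool is counted once per component regardless of how many of its features target that component, the percentages across components need not sum to 100.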

3.4 Discussion

In this chapter, we set out to map the landscape of current digital self-control tools and relate them to an integrative dual systems model of self-regulation. Our review of 367 apps and browser extensions found that blocking distractions or removing user interface features were the most common approaches to digital self-control. Grouping design features into clusters, the prevalence ranking was block/removal > self-tracking > goal advancement > reward/punishment. Out of these, 65% of tools focused on only one cluster in their core design; and most others (32%) on two. The frequencies of design features differed between the Chrome Web Store, Play Store, and Apple App store, which likely reflects differences in developer permissions. When mapping design features to the dual systems framework, the least commonly targeted cognitive component was unconscious habit scaffolding, followed by the delay and expectancy elements of the expected value of control.

We now turn to discuss how these empirical observations can inform future research by pointing to: i) widely used and/or theoretically interesting design patterns in current digital self-control tools that are underexplored in HCI research; ii) feature gaps identified by the dual systems framework, showing neglected areas that could be relevant to researchers and designers; and iii) how the model may be used directly to guide research and intervention design. We then outline limitations and future work.

3.4.1 Research opportunities prompted by widely used or theoretically interesting design patterns

The market for digital self-control tools (DSCTs) effectively amounts to hundreds of natural experiments in supporting self-control, meaning that successful tools may reveal design approaches with wider applicability. These approaches present low-hanging fruit for research studies, especially as many are so far lacking evaluation in terms of their efficacy and the transferability of their underlying design mechanisms. As an example, we highlight three such instances:

Responsibility for a virtual creature

Forest (Seekrtech 2018) ties device use to the well-being of a virtual tree. Numerous variations and clones of this approach exist among the tools reviewed, but Forest is the most popular with over 10 million users on Android alone (Google Play 2017). It presents a novel use of ‘virtual pets’ that requires the user to abstain from action (resist using their phone) rather than take action to ‘feed’ the pet, and is a seemingly successful example of influencing the reward component of expected value of control.

Redirection of activity

Timewarp (Stringinternational.com 2018) reroutes the user to a website aligned with their productivity goals when navigating to a distracting site (e.g., from Reddit to Trello), and numerous tools implement similar functionality. Such apps seem to be automating ‘implementation intentions’ (if-then rules for linking a context to a desired response, Gollwitzer and Sheeran (2006)), an intervention which digital behaviour change researchers have highlighted as a promising way to scaffold transfer of conscious System 2 goals to automatic System 1 habits (Pinder et al. 2018; Stawarz, Cox, and Blandford 2015).
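The sense in which redirection automates a rule that links a context to a response can be shown with a short sketch. It does not describe how Timewarp itself is implemented: the rule table, the function name `apply_redirect`, and the handling of the `www.` prefix are assumptions for illustration, using the Reddit-to-Trello example from the text.

```python
from urllib.parse import urlparse

# Hypothetical rules of the form "if I navigate to <key>, go to <value> instead".
REDIRECT_RULES = {
    "reddit.com": "https://trello.com/",
}


def apply_redirect(url: str, rules: dict[str, str] = REDIRECT_RULES) -> str:
    """Return the redirect target if the URL's host matches a rule, else the URL."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]  # treat www.reddit.com and reddit.com alike
    return rules.get(host, url)


redirected = apply_redirect("https://www.reddit.com/r/all")
untouched = apply_redirect("https://example.org/page")
```

Once such a rule is configured, the cue (navigating to the distracting site) triggers the desired response without any further System 2 involvement at the moment of action.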

Friction to override past preference

A significant number of tools not only allow the user to restrict access to digital distractions, but also add a second layer of commitment, e.g., by making blocking difficult to override, as in the browser extension Focusly (Trevorscandalios 2018), which requires a laborious combination of keystrokes to be turned off. This raises important design and ethical questions about how far a digital tool should go to hold users accountable for their past preferences (cf. Bryan, Karlan, and Nelson 2010; Lyngs et al. 2018).

3.4.2 Gaps identified by the dual systems framework

By applying the dual systems framework, we also identified three cognitive mechanisms that appear underexplored by current digital self-control tools. Focusing on these mechanisms might lead to new powerful approaches to digital self-control:

Scaffolding habits

Similar to the situation in general DBCIs (cf. Pinder et al. 2018; Stawarz, Cox, and Blandford 2015), the least frequently targeted cognitive component relates to scaffolding of new, desirable unconscious habits (as opposed to preventing undesired ones from being triggered via blocking or feature removal). Habit formation is crucial for long-term behaviour change, and in the context of DBCIs, Pinder et al. (2018) suggested implementation intentions and automation of self-control as good candidate design patterns for targeting habit formation. We note that some such interventions are already being explored amongst current digital self-control tools: apart from the tools mentioned above that redirect activity, four tools allow blocking functionality to be linked to the user’s location (e.g., AppBlock, Mobilesoft (2019), and Bashful Runnably (2019)). We expect this to be a powerful way to automatically trigger a target behaviour in a desired context.

Delay

The delay component of expected value of control is also less commonly targeted: the number of tools including functionality targeting delay drops to 4% if we exclude the display of a timer (which raises time awareness rather than affecting actual delays). This is surprising from a theoretical perspective, because the effects on behaviour of sensitivity to delay are strong, reliable, and (at least to behavioural economists) at the core of self-control difficulties (Ariely and Wertenbroch 2002; Dolan et al. 2012). Even if rewards introduced by gamification features may have the side effect of reducing the delay before self-control is rewarded, it remains surprising that only two of 367 reviewed tools directly focus on using delays to scaffold successful self-control (Space, Boundless Mind Inc (2018), increases launch times for distracting apps on iOS; Pipe Clogger, Croshan (2018), does the same for websites). As previous research has found people to be especially sensitive to delays in online contexts (Krishnan and Sitaraman 2013), we expect interventions that leverage delays to scaffold self-control in digital environments to be highly effective.
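As an illustration of what a delay-based intervention might look like, the sketch below computes a launch delay for a distracting app. It does not describe how Space or Pipe Clogger work internally: the function name `launch_delay`, its parameters, and in particular the choice to let the delay grow with the number of launches so far that day are assumptions made for the sake of the example.

```python
def launch_delay(app, distracting, launches_today, base=5.0, step=2.0, cap=30.0):
    """Return the number of seconds to wait before opening `app`.

    Assumed policy (hypothetical): apps not marked as distracting open
    immediately; distracting apps wait `base` seconds plus `step` seconds
    for every earlier launch today, up to a maximum of `cap` seconds.
    """
    if app not in distracting:
        return 0.0
    return min(base + step * launches_today, cap)
```

Under this policy the cost of giving in to an urge rises over the course of the day, whereas goal-aligned apps remain immediately available.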

Expectancy

The expectancy component (i.e., how likely a user thinks it is that she will be able to reach her goal through self-control exertion) was also less frequently targeted, and mainly through timers limiting the duration for which the user tries to exert self-control. Given the crucial role of self-efficacy in Bandura’s influential work on self-regulation (Bandura 1991), this may also represent an important underexplored area. One interesting approach to explore is found in Wormhole Escaper (Bennett 2018), which lets users administer words of encouragement to themselves when they manage to suppress an urge to visit a distracting website. In so far as this is effective, it may be by boosting the user’s confidence in their ability to exert self-control.

3.4.3 Using the dual systems framework directly to guide intervention research and design

The abstracted nature of the dual systems framework enables it to be utilised on different levels of analysis to inspire new avenues for research as well as drive specific design:

For researchers, the framework may be used to organise existing work on design patterns for digital self-control by the cognitive components they target, and as a roadmap for future studies that focus on different components of the self-regulatory system. Whereas many theories and frameworks are on offer for this purpose, one advantage of the dual systems approach is that it provides HCI researchers with clear connections to wider psychological research on basic mechanisms of self-regulation, which can be utilised in design (cf. section 7.3).

For designers, the framework may be used as a starting point for design considerations that are aligned with the cognitive mechanisms involved in self-regulation; its components can be readily expanded if inspiration from more fine-grained theoretical constructs and predictions is required. For example, the ‘reward’ component readily expands into more specific models explaining the types of stimuli that may be processed as rewards; how the timing of rewards affects their influence; how the impact of gains differs from that of losses; and so on (Berridge and Kringelbach 2015; Caraco, Martindale, and Whittam 1980; Kahneman and Tversky 1979; Schüll 2012). As we saw in Chapter 2, existing studies have already benefitted from the use of narrower theories, such as Y.-H. Kim et al. (2016)’s reliance on work on differential sensitivity to gains vs. losses in evaluations of their tool TimeAware.

3.4.4 Limitations

Our review has some limitations. First, the tool analysis presented in this chapter is focused on functionality; in Chapter 4, we supplement this with an analysis of information drawn from user numbers, ratings, and reviews.

Second, the dual systems framework we have applied points to directions for future research, but its high-level formulation leaves its cognitive design space under-specified. How precisely one should be able to anchor details of specific interventions directly in causal theories is a point of longstanding debate (Ajzen 1991; Hardeman et al. 2005; Michie et al. 2008). As mentioned above, however, a main benefit of the dual systems framework is that while concise, it remains directly grounded in well-established basic research on self-regulation. This means that each component of the model has a substantial literature behind it, so that more detailed specifications and predictions can be found in lower-level theories on demand.

3.5 Conclusion

In this chapter, we have started to address the main research question of the present thesis in two ways: (i) by providing the first comprehensive functionality analysis of current apps and browser extensions for digital self-control on the Google Play, Chrome Web, and Apple App stores, and (ii) by applying a well-established framework of self-regulation to evaluate their design features and provide a mechanistic understanding of the problem they address.

In the next chapter, we extend this chapter’s analysis of tool functionality with an analysis of these tools’ user numbers, average ratings, and public reviews.